When an audio deepfake is used to harm a reputation

A Maryland school principal was the victim of a frame job carried out with AI. This case shows just how convincing and damaging manipulated audio and other deepfakes can be, and how the AI-powered scam detection tools included with Norton 360 Deluxe can help you fight back.

Three teachers received an email with an audio recording of their school's principal apparently making racist and antisemitic comments and disparaging other teachers. The catch? One of those three teachers had created the recording using AI and sent it anonymously, all to frame the principal, who had raised concerns about that teacher's performance.

In a scenario straight out of a Black Mirror episode, the recording spread across social media, and the principal had to endure less-than-compassionate public scrutiny. He was placed on paid administrative leave until the investigation concluded.

Fortunately, the investigators, including a forensic analyst contracted by the FBI, uncovered the plot. They concluded that the recording had been heavily edited and that its source was none other than the disgruntled teacher, who now faces multiple charges.

Weaponizing AI

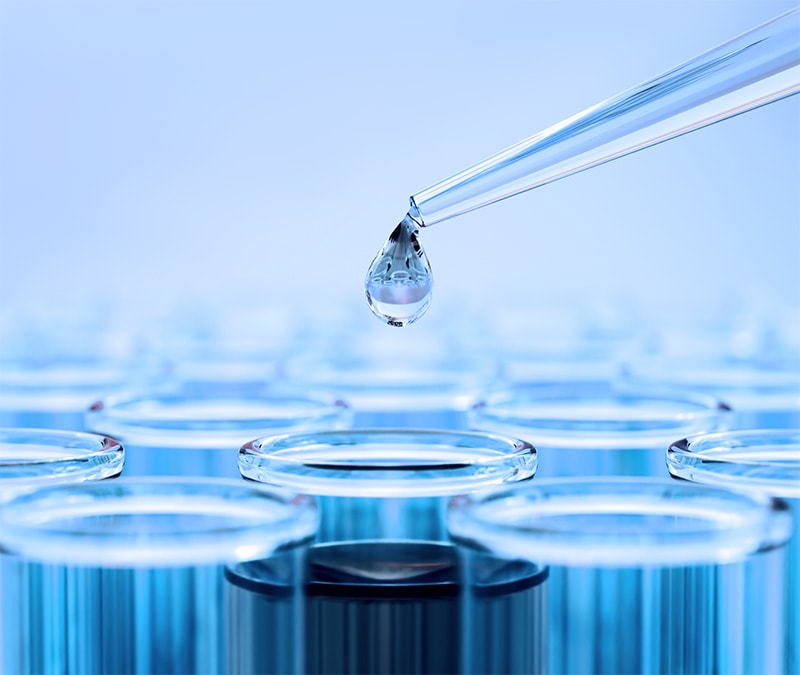

AI holds a lot of promise for many sectors, among them education and health care. It can automate routine tasks, provide personalized experiences, and even help in complex problem-solving scenarios. AI’s capacity to generate realistic content can also be used for creative purposes, such as filmmaking and virtual reality.

However, AI can also be exploited. Deepfakes represent a disturbing trend in which AI-generated audio and video make it seem as though someone said or did things they never did. Deepfakes started out as a cool novelty, but they've quickly become a tool for malicious activities, including fraud, misinformation, and personal attacks.

That's why leading security providers are developing defenses that use AI for good to flip the script on AI threats. Norton 360 Deluxe combines advanced AI analysis with the power of your Windows AI PC to help detect deepfakes and spot video scams before they can fool you. That matters because deepfakes aren't the only AI-powered threat: other attacks, such as AI-generated phishing emails, can make a cybercriminal's pitch far more convincing.

Spotting the fakes

Identifying deepfakes can be tricky business, but awareness and attention to detail can help.

Here are some tips to help you determine whether audio or video might be a deepfake:

- Check your sources. One of the main clues in this investigation was that the email address the audio was sent from was traced back to the perpetrator. If the source is unclear or suspicious, cross-check it before drawing conclusions or sharing, especially if the content seems out of character for the person involved.

- Look out for inconsistencies. In audio, listen for odd pauses, lag, or unnatural pronunciation. In video, look for unnatural eye and body movements, inconsistent lighting or backgrounds, and poor lip-syncing.

- Use cutting-edge detection tools. The powerful scam protection suite within Norton 360 Deluxe now includes Deepfake Protection, helping to flag manipulated media before it can be used against you.

Protecting yourself from starring in a deepfake

There's no way to guarantee that you'll never appear in a deepfake. However, there are things you can do to reduce the risk.

- Think before sharing. The more of your likeness and voice you share online, the more material you're providing to bad actors. Consider limiting who can see your personal content, particularly photos, videos, and recordings where you're front and center.

- Report deepfakes. Even if it's just a random ad on social media, report any deepfake that uses a person's likeness to attack their reputation, spread misinformation, or turn a profit.

- Stay informed. Keep up with the latest trends in AI and deepfake technology.

- Educate others. Share your knowledge about deepfakes with friends and family. Awareness is a powerful tool against misinformation.

Awareness and skepticism are key

As AI continues to evolve, so will the methods used to create and detect deepfakes. Maintaining a healthy skepticism and staying informed about technological advances are your best defenses against the misuse of AI. Remember to check your sources, verify claims before sharing them, and use Norton 360 Deluxe to help protect yourself from becoming the next victim of an AI-generated scam.

Editorial note: Our articles provide educational information for you. Our offerings may not cover or protect against every type of crime, fraud, or threat we write about. Our goal is to increase awareness about Cyber Safety. Please review complete Terms during enrollment or setup. Remember that no one can prevent all identity theft or cybercrime, and that LifeLock does not monitor all transactions at all businesses. The Norton and LifeLock brands are part of Gen Digital Inc.
