What is dark AI and how can you protect yourself?

Artificial intelligence is reshaping the world, but it has the potential for harm: AI used for malicious purposes, known as dark AI, can threaten your privacy and digital security. Learn more about tools, tactics, and cybersecurity software that can keep you safer in the age of AI.

Generative AI has the potential to improve productivity, creativity, and efficiency. But its abilities aren’t limited to positive outcomes. The same technology is also making cybercrime easier to commit, helping scammers and hackers create and deploy fraudulent schemes that are more convincing and more dangerous than before.

Dark AI tools like FraudGPT and WormGPT are ChatGPT-style chatbots designed specifically to help fraudsters create scams, malware, and more. They require little to no technical expertise, lowering the barrier to entry for would-be cybercriminals. With the help of dark AI, almost anyone can put together a fraudulent scheme in seconds.

This is fueling a growing threat to both businesses and individuals. Since the widespread adoption of generative AI in 2023, cyberattacks have increased by 30% globally. Learning how to spot and protect against AI scams is key to keeping yourself, your devices, your privacy, and your data safer.

What is dark AI and how does it work?

Dark AI is AI technology used for malicious or illegal activity. The term is often used to describe specific AI tools, like FraudGPT, that are engineered to assist with cybercrime. Dark AI is powered by generative AI, the technology behind tools like ChatGPT and Google Gemini. However, dark AI tools lack the safeguards and guardrails that these mainstream tools have in place to prevent malicious activity.

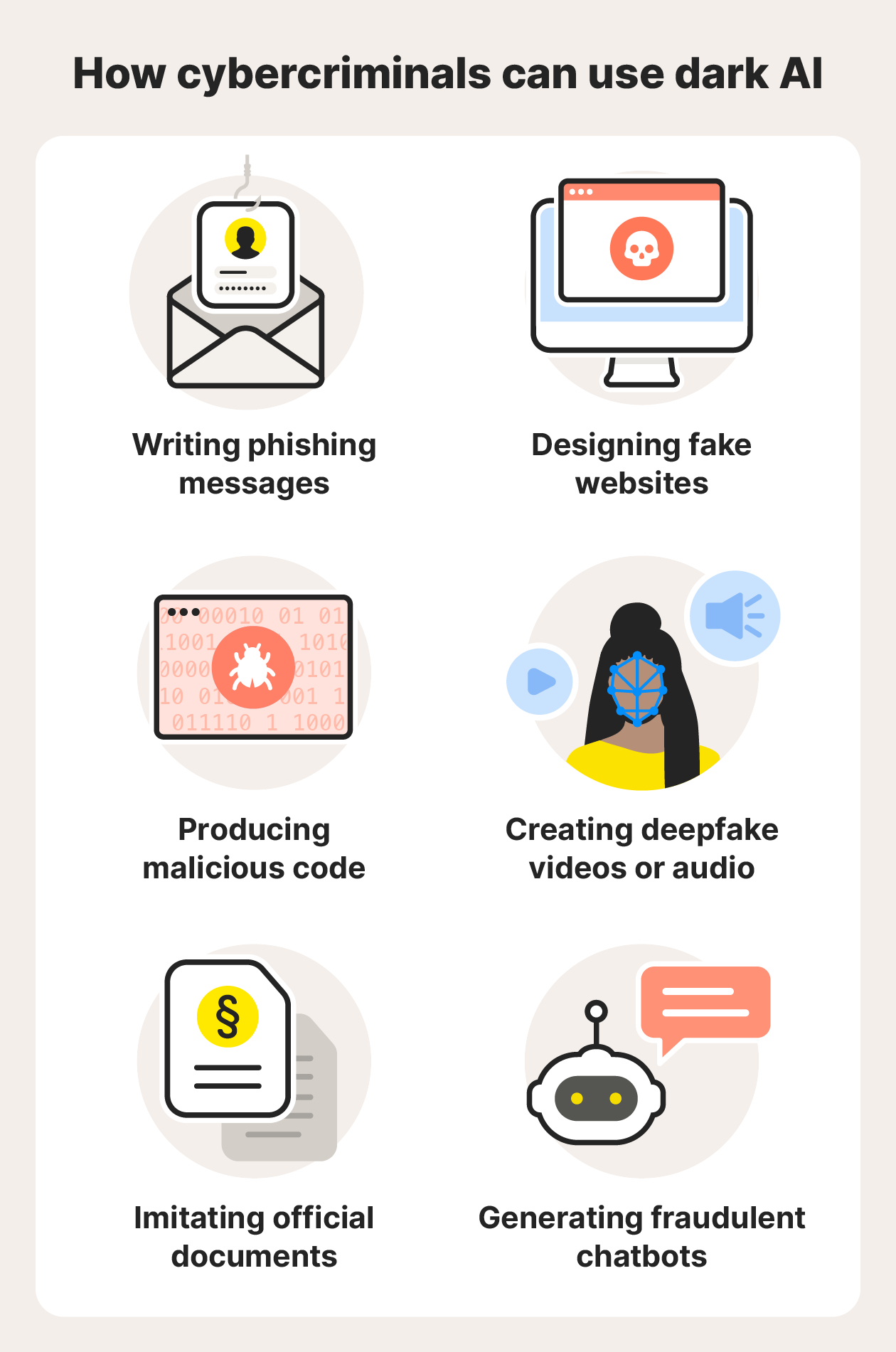

That means users can prompt dark AI tools to write phishing messages, build imposter websites, write malicious code, create deepfakes, and much more — all the building blocks of a scam or hacking attack. Plus, the speed with which AI can generate these assets means fraudsters and hackers can move faster than ever before, putting individuals and organizations at serious risk of data breaches, identity theft, and scams.

Dangers of dark AI

Dark AI is dangerous because it has the potential to greatly increase the frequency and effectiveness of cyberattacks. Dark AI attacks target both human and technical vulnerabilities, and they can be harder to detect than traditional threats.

For example, social engineering scams like phishing are becoming much harder to recognize. AI-generated phishing messages can be nearly indistinguishable from authentic human-written content, allowing scammers to craft highly persuasive messages that are free from traditional warning signs like spelling or grammar errors.

Here’s a summary of some of the key dangers of dark AI:

- More hackers: Scammers no longer need to be technically skilled to perpetrate advanced attacks. With the help of dark AI, more people will be able to create scams and malware.

- More cyberattacks: AI can design elaborate attacks and social engineering campaigns in seconds. This has the potential to sharply increase the number of attacks, saturating the internet with risks.

- Enforcement difficulties: Dark AI is developing faster than defenders can keep up. Cybersecurity teams and law enforcement agencies may struggle to handle the scale and sophistication of these attacks.

- Automated social engineering: Like ChatGPT, dark AI tools can have human-like conversations with users. Scammers can train AI programs to scrape web data on a specific person, turn it into a personalized phishing message (emulating an email from their boss, for example), and continue the conversation realistically if the target replies.

- AI-generated malware: Dark AI can help create complex and dangerous malware, such as spyware or ransomware, with very little effort from the attacker.

- Deepfakes and voice cloning: Dark AI can create convincing audio and video clips of real people, often known as deepfakes, with just a reference image or audio snippet. These deepfakes can be used to manipulate victims and spread misinformation.

- Bypassing cybersecurity: Dark AI tools can test security systems and design sophisticated attacks to bypass them. This may reduce the effectiveness of firewalls and malware detection software.

Common dark AI tools

Dark AI tools are AI programs engineered to help users commit crimes. The number of dark AI tools is growing along with the popularity and sophistication of generative AI. In 2024, conversations about dark AI tools increased by over 200% on cybercrime forums.

Well-known dark AI tools include:

- FraudGPT: This generative dark AI program was designed to help users commit fraud, identity theft, and cybercrime. It’s often used to create malicious code and phishing messages, and licenses are sold on the dark web.

- WormGPT: WormGPT is like ChatGPT with all of its safeguards removed. It’s popular with cybercriminals for generating social engineering attacks and malicious code.

- PoisonGPT: PoisonGPT is an AI tool created to trick unsuspecting users into spreading misinformation. This type of dark AI tool doesn’t attack people directly. Instead, it attacks other AI tools by injecting malicious or misleading data into an AI’s training material. The result is a seemingly normal AI model that provides misleading information when asked about certain topics.

- FreedomGPT: FreedomGPT is an uncensored generative AI program (like ChatGPT without restrictions). It’s not considered a full dark AI, as it’s available on the Google Play Store and App Store. While it wasn’t created for malicious purposes, its apparent lack of security guardrails has raised concerns.

How to protect yourself from dark AI

Protecting yourself from dark AI threats requires a combination of threat awareness and cybersecurity software, such as antivirus protection. This is because some AI attacks happen behind the scenes, infecting your devices with malware, while others happen in the open, manipulating you into giving up your personal info.

While dark AI scams may become harder to avoid, you can help protect against them by practicing the following cyber hygiene habits:

- Use advanced scam detection software: New cybersecurity technology uses helpful AI to fight back against harmful AI. These tools, such as Norton Genie Scam Detector, can use AI to automatically detect phishing scams, malware, and other threats.

- Don’t respond to unsolicited messages: As phishing attacks increase, you should consider every unsolicited message as a potential threat. Don’t respond to messages from senders you don’t recognize. If someone you know asks for money or personal info, confirm the legitimacy of the message via another communication channel before taking any action.

- Use strong and unique passwords: Dark AI tools can crack weak passwords with ease. In tests, they cracked over 50% of the passwords tested in less than a minute and over 80% in under a week. Create long, complex passwords, use a different one for every account, and save them in a password manager to protect yourself from automated attackers.

- Enable multi-factor authentication (MFA): Multi-factor authentication requires you to input more than one form of verification when logging into your accounts (such as a password and a code sent via SMS). If a dark AI attack cracks your password, MFA could help keep a hacker out of your account.

- Stay informed about new scams: Staying up to date on scammers’ latest schemes can help you avoid their tricks, and it doesn’t take much effort. Browsing a threat report every few months is a good way to stay informed.

- Be cautious of deepfakes and voice impersonation: It’s one thing to be cautious of text-based communication, but dark AI presents audio and video threats, too. Dark AI programs may be able to imitate the voices of people you know and replicate real people in fake videos.

- Avoid oversharing personal information online: Don’t share any personal information about yourself or loved ones online, especially if it can be used to identify you. Dark AI tools can scrape benign information to profile you and target you in sophisticated attacks.

- Install and update antivirus and anti-malware tools: As dark AI attacks become more advanced, so does antivirus software. Install advanced cybersecurity software like Norton 360 Deluxe that includes Deepfake Detection and other AI-powered scam protection features to help defend against the latest ploys.
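To make the advice about strong and unique passwords more concrete, here is a minimal Python sketch of how a random password can be generated and how its strength can be estimated. It is only an illustration, not how any particular password manager works: the function names `generate_password` and `entropy_bits`, the 94-character alphabet, and the 12-character minimum are all assumptions chosen for this example.

```python
import math
import secrets
import string

# Assumed alphabet for this sketch: letters, digits, and ASCII punctuation (94 characters).
ALPHABET = string.ascii_letters + string.digits + string.punctuation


def generate_password(length: int = 20) -> str:
    """Return a random password using a cryptographically secure generator."""
    # The 12-character minimum is an illustrative threshold, not an official standard.
    if length < 12:
        raise ValueError("use at least 12 characters")
    return "".join(secrets.choice(ALPHABET) for _ in range(length))


def entropy_bits(length: int, alphabet_size: int = len(ALPHABET)) -> float:
    """Estimate the entropy, in bits, of a uniformly random password."""
    return length * math.log2(alphabet_size)


if __name__ == "__main__":
    print(generate_password())
    print(f"about {entropy_bits(20):.0f} bits of entropy")
```

Each extra character multiplies the number of possible passwords an automated cracker has to try, which is why length matters so much. In practice, a password manager does this for you and remembers a different password for every account.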

Don’t let dark AI catch you off guard

Dark AI attacks are poised to threaten devices, lure victims into scams on social media, and even infect helpful AI tools with malicious data. But you don’t have to fall victim to the rise of dark AI.

Stay informed of the latest online scams and download cybersecurity software that offers protection against emerging dark AI threats, such as Norton 360 Deluxe. Norton 360 Deluxe combines award-winning malware and virus defenses with cutting-edge Deepfake Detection and other AI-powered scam protection features to help keep you and your devices safe.

FAQs

Is all AI dangerous?

No. Most AI tools have built-in safeguards and content moderation policies to prevent malicious activity and misinformation. However, dark AI tools are created for malicious purposes like spreading malware and building social engineering campaigns. Dark AI is dangerous to individuals and organizations, but keeping up to date with the latest cybersecurity threats and investing in protection can help you avoid these dangers.

Is AI a scam?

No. AI is legitimate technology powered by machine learning algorithms. AI tools use advanced pattern recognition to generate original content, complete tasks, and solve problems. However, AI can be used to perpetrate scams. For example, hackers can use it to write malicious code, create malware, or automate phishing attacks. AI tools used with malicious intent are known as dark AI.

Why do criminals use AI?

Criminals use AI to increase their productivity and efficiency, especially when committing cybercrimes. AI helps them create and perpetrate scams at scale by automating criminal activity. For example, criminals can launch AI-assisted cyberattacks that generate fake texts and write malicious code in seconds. Before AI, they had to create that content from scratch. With dark AI tools, criminals can increase the frequency and effectiveness of their attacks.

Editorial note: Our articles provide educational information for you. Our offerings may not cover or protect against every type of crime, fraud, or threat we write about. Our goal is to increase awareness about Cyber Safety. Please review complete Terms during enrollment or setup. Remember that no one can prevent all identity theft or cybercrime, and that LifeLock does not monitor all transactions at all businesses. The Norton and LifeLock brands are part of Gen Digital Inc.
