AI cyberattacks: How they work and how to protect yourself

AI is a game-changer for cybercriminals, giving hackers faster, smarter, and stealthier ways to strike. With generative AI, it’s possible to create polished phishing scams, malware, and impersonation campaigns that slip past traditional defenses. Keep reading to learn about emerging AI threats and how you can help protect yourself with AI-ready cybersecurity from Norton.

AI is taking over the world of cybercrime. Since the launch of popular large language models (LLMs) like ChatGPT, hackers have been using AI to design and automate attacks, including deepfakes, social engineering campaigns, AI malware, and more.

Just months after ChatGPT launched in 2022, research showed that ransomware attacks spiked by 91% compared to the previous year. And that trend continues today, with AI ransomware like Magniber contributing to a 50% increase in attacks in the final three months of 2024 alone.

Social engineering attacks are also increasingly AI-driven. Around 40% of phishing emails targeting businesses are now AI-generated, and the use of deepfakes has spiked by over 2,100% since the mainstream adoption of AI.

What is an AI cyberattack?

An AI cyberattack is a form of cybercrime that leverages artificial intelligence. Hackers use AI to increase the speed, scale, and effectiveness of their operations: writing malicious code, launching automated social engineering campaigns, and identifying and exploiting security vulnerabilities faster than before.

AI has also given rise to entirely new attack types, such as “adversarial attacks,” where threat actors inject corrupted or misleading data into AI systems to manipulate their outputs. These attacks can spread misinformation, disrupt digital services, or erode public trust in AI technologies.

As AI becomes more deeply woven into daily life, these threats are making online environments harder to secure by traditional means. That highlights the growing need for AI-aware cybersecurity tools and smarter digital hygiene to help users detect deception and stay protected.

Examples of AI cyberattacks

Hackers are using AI in increasingly clever ways to manipulate both systems and people. But staying informed can help you stay one step ahead of AI scams and other AI-powered cyberattacks.

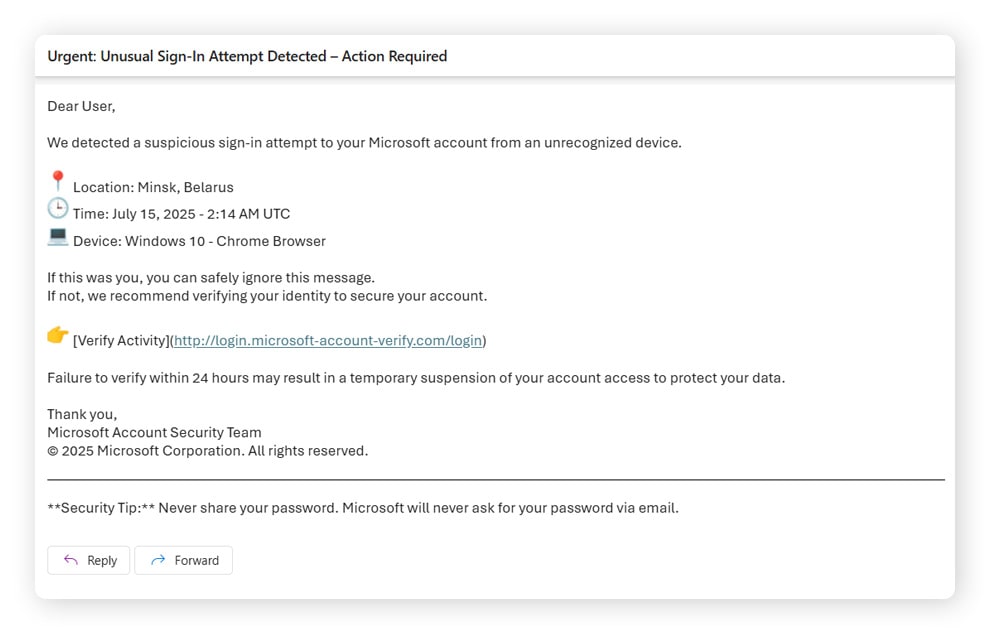

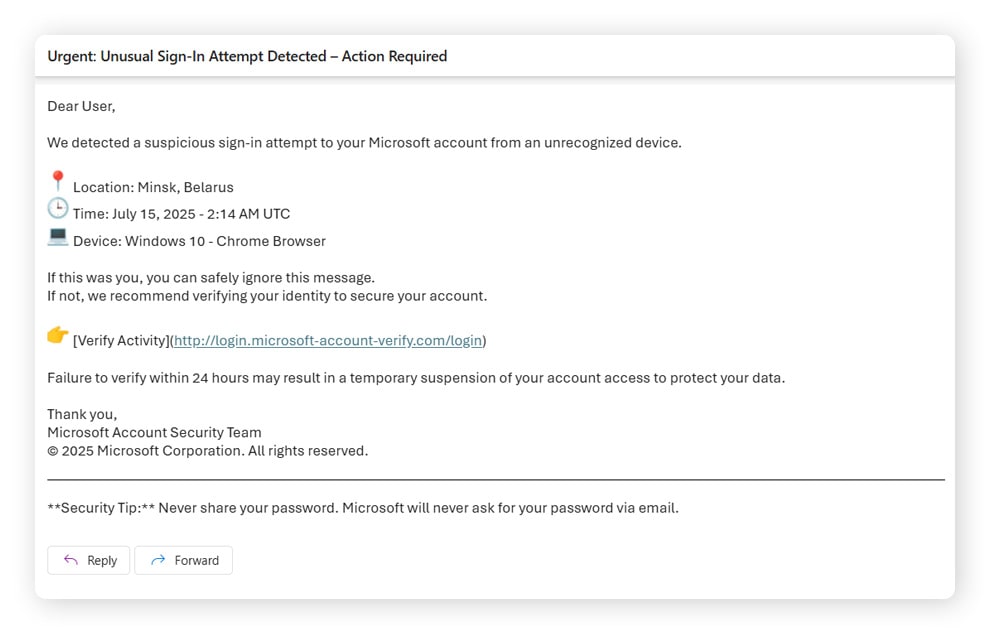

AI-generated phishing attacks

In an AI phishing attack, hackers use generative AI tools like WormGPT (or similar dark AI tools) to craft convincing phishing messages at scale. These messages are designed to impersonate trusted people or organizations, such as banks, to trick users into giving away their money or personal information.

Unlike traditional phishing attempts, which are often riddled with typos or awkward language, these messages are typically grammatically correct, highly convincing, and often tailored to the target. They can take the form of emails, “smishing” texts, social media messages, or even direct messages on collaboration platforms.

The FBI has warned that these AI-enhanced scams represent the most sophisticated phishing attacks seen to date. The Hoxhunt Phishing Trends Report highlights this notable shift: since the start of the AI explosion, there has been a 49% rise in phishing emails capable of bypassing traditional spam filters.

Video deepfakes

A video deepfake is a highly convincing, AI-generated impersonation of a real person. These manipulated videos are designed to mislead, often portraying public figures saying or doing things they never did. Because they look authentic, deepfakes are powerful tools for spreading misinformation and perpetrating scams.

Scammers frequently use deepfakes of celebrities and business leaders to promote fake giveaways, investment schemes, or crypto fraud. For instance, Elon Musk has been deepfaked in thousands of online ads and livestreams — some viewed by tens of thousands of people — promising fraudulent returns on cryptocurrency. These scams have fueled billions in losses.

Although Neural Processing Unit (NPU) deepfake detection technology is improving, deepfakes remain hard to contain and can spread rapidly across platforms before detection systems catch up. Staying alert to telltale signs like awkward speech patterns, mismatched lip-syncing, or too-good-to-be-true offers can help you spot a deepfake before it fools you.

Audio deepfakes

Audio deepfakes are AI-generated voice clones that mimic real people. With as little as three seconds of recorded audio, attackers can convincingly replicate someone’s voice and make it say anything they choose. These synthetic voices are increasingly used in impersonation scams, including fake calls from loved ones, co-workers, or financial institutions.

A high school principal in Maryland was a recent victim of an audio deepfake that falsely portrayed him making racist and antisemitic remarks. Though the fake was exposed and the creator caught, the clip still spread widely, damaging his reputation and showing how audio deepfakes can cause lasting harm, even after they’re proven false.

AI-generated malware

Beyond producing text and images, generative AI can write code, enabling cybercriminals to create malware. Dark AI tools like FraudGPT and WormGPT are designed specifically for this purpose, helping even rookie attackers craft sophisticated malware with minimal effort.

One recent example of AI-generated malware is ToxicPanda, a type of spyware that disguises itself as legitimate apps. On a device, it appears to be a dating app or even Google Chrome. But once installed, it can bypass system monitoring tools commonly used by banking apps and initiate unauthorized payments.

AI-supported ransomware attacks

AI ransomware is a type of AI malware that locks or encrypts a victim’s data and demands payment to release it. What sets it apart is the use of artificial intelligence to identify high-value data, adapt encryption techniques in real time, and evade traditional ransomware defenses.

Ransomware statistics show that this emerging threat has become one of the most aggressive forms of AI-driven cybercrime. In December 2024, a single hacker group, FunkSec, claimed responsibility for more than 85 AI-enabled ransomware attacks. And this is reflected on the macro level too, with AI-powered ransomware attacks rising by over 125% during the first three months of 2025.

AI social media attacks

AI social media attacks use AI-generated content to scam or mislead users on platforms like Facebook, Instagram, and TikTok. These social media threats can come in the form of deepfake videos, fake AI-generated images, scam ads, and phishing messages.

In 2024, scammers ran a series of deceptive Facebook ads featuring a deepfake of Taylor Swift, falsely promoting a free cookware giveaway. Fans who clicked the link unknowingly handed over their personal and financial information to cybercriminals.

What makes these attacks even more dangerous and difficult to spot is that not all AI-generated content violates platform rules, and some misleading posts remain online because they technically don’t break the law. In fact, researchers found that Facebook’s algorithm was amplifying AI spam, unintentionally driving users toward deceptive and fake websites.

AI hacking

AI hacking occurs when cybercriminals manipulate AI tools to produce harmful, biased, or misleading output, a tactic known as an adversarial attack. Hackers do this by feeding corrupted or malicious data into the AI system, causing it to bypass guardrails or behave unpredictably.

Just a few manipulated inputs can trick AI into generating false information, skipping safety checks, or misidentifying threats. This puts users at risk of exposure to scams, misinformation, or malicious code disguised as legitimate output. And as AI adoption grows, so does the potential for attackers to exploit these vulnerabilities at scale.
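
To make the idea of corrupted training data concrete, here is a deliberately tiny sketch in Python. It is a toy word-counting classifier written for this illustration, not how any real AI product works, and the sample messages and the `train` and `classify` helpers are all hypothetical. It only shows how a handful of mislabeled examples can flip a model's verdict.

```python
from collections import Counter

def train(examples):
    """Count how often each word appears under each label."""
    counts = {"scam": Counter(), "safe": Counter()}
    for text, label in examples:
        counts[label].update(text.lower().split())
    return counts

def classify(counts, text):
    """Pick the label whose training data contained the message's words more often."""
    words = text.lower().split()
    scam_score = sum(counts["scam"][w] for w in words)
    safe_score = sum(counts["safe"][w] for w in words)
    return "scam" if scam_score > safe_score else "safe"

# Hypothetical, hand-written training data.
clean_data = [
    ("claim your free prize now", "scam"),
    ("urgent wire transfer needed", "scam"),
    ("lunch meeting moved to noon", "safe"),
    ("see attached project notes", "safe"),
]

# Assumed attack: three mislabeled samples are slipped into the training set.
poisoned_data = clean_data + [("free prize", "safe")] * 3

message = "free prize inside"
print(classify(train(clean_data), message))     # scam
print(classify(train(poisoned_data), message))  # safe
```

Real systems are far harder to manipulate than this toy, but the principle is the same: a model is only as trustworthy as the data it learns from.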

How to spot AI attacks

AI attacks can unfold on your phone, social media accounts, inbox, and even on AI platforms themselves. But you can help avoid getting scammed if you know what to look for.

Watch for these warning signs to help protect yourself from AI-driven cyberattacks:

- Unusual language: If a video or message feels too formal, awkward, or just isn’t how the person usually speaks or writes, it might be an AI impersonation.

- Hyper-personalized information: Scammers may use AI to scrape your social media and insert personal details into messages to build false trust. For example: “I loved your post about your dog Max’s surgery!”

- Robotic voice or inconsistent tone: AI-generated voices often lack natural rhythm or emotion. If a voice sounds robotic, flat, or slightly off, it could be a deepfake.

- Voice doesn’t sync with video: If a person’s mouth doesn’t sync with the sound in a video, this is a classic sign of a deepfake.

- Imperfections in images: Look for common AI image flaws such as blurry or gibberish text, overly smooth or plastic-looking skin, warped backgrounds, asymmetrical facial features, or inconsistent lighting.

- Get-rich-quick promises: Be skeptical of messages promoting cryptocurrency, giveaways, or fast cash, especially if they feature celebrity endorsements.

- Third-party AI apps: Stick with well-known platforms like ChatGPT, Claude, and Gemini, and avoid unverified AI tools, which may harbor malware or harvest your data.

- Urgent appeals to emotion: Like many internet scams, AI attacks often urge you to act now. If a message claims a loved one is in danger or you’ve got mere minutes to avoid missing out on a deal, it’s likely a trap.

- Links and QR codes: Malicious QR codes and links are how hackers move you from safe spaces to malicious ones online. Never click or scan one unless you’re certain it’s safe.
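
A few of the text-based warning signs above can be sketched as a simple checklist in Python. This is only an illustration: the phrase lists and the `warning_signs` function are assumptions made up for this example, and real scam detection relies on far richer signals than keyword matching.

```python
import re

# Hypothetical phrase lists for illustration only.
URGENCY = ("act now", "immediately", "urgent", "within minutes", "last chance")
MONEY_BAIT = ("giveaway", "crypto", "guaranteed returns", "fast cash")
LINK_PATTERN = re.compile(r"https?://\S+", re.IGNORECASE)

def warning_signs(message: str) -> list[str]:
    """Return the warning signs (from the list above) found in a message."""
    text = message.lower()
    signs = []
    if any(phrase in text for phrase in URGENCY):
        signs.append("urgent appeal")
    if any(phrase in text for phrase in MONEY_BAIT):
        signs.append("get-rich-quick promise")
    if LINK_PATTERN.search(message):
        signs.append("contains a link")
    return signs

print(warning_signs("URGENT: claim your crypto giveaway at http://example.test/win"))
print(warning_signs("Lunch at noon?"))
```

A message that trips several checks at once deserves extra suspicion, while a clean result proves nothing: polished AI-written scams can avoid every obvious keyword.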

How AI is changing the way cybercriminals operate

AI attacks represent a major shift in cybercrime, making it easier, faster, and more effective for hackers to launch large-scale, sophisticated threats. With AI, cybercriminals can automate tasks that once demanded coding expertise and hours of manual work, allowing them to scale operations and slip past traditional defenses with alarming ease.

The result is a surge of more scalable, persistent, and potent cyber threats, with roughly 1 in 3 attempts at AI-driven fraud proving successful. This places greater strain on security professionals and law enforcement, and forces everyday users to take steps to protect themselves.

Here are a few ways that AI is helping cybercrime evolve:

- Eliminates some signs of cybercrime: In the past, hackers often gave themselves away with awkward phrasing, poor coding, or clumsy impersonations. AI removes many of those errors, making fake content more polished, realistic, and harder to detect.

- Malware code generation: AI can generate fully functional malware in seconds, allowing even low-skilled criminals to produce ransomware, spyware, and other malicious tools with ease.

- Responsive code that bypasses defenses: AI-generated malware can adjust itself to different digital environments, helping it evade more traditional antivirus software, firewalls, and spam filters.

- Real-time deepfakes: AI can generate deepfake videos and audio in real time, allowing hackers to livestream, call, and video chat while impersonating someone else.

- Dark LLMs: Dark LLMs like FraudGPT and DarkBart are purpose-built for cybercrime, offering ChatGPT-level assistance in creating phishing messages, malware, and other attacks.

- AI password cracking: AI-enhanced brute-force tools can analyze user behavior and password patterns, making them far more efficient at guessing and cracking login credentials.

- Hyper-personalized phishing: AI tools can crawl social media pages for personal information to use in phishing messages, adding a new level of perceived trust to scam messages.

How to help protect yourself from AI cyberattacks

AI threats are a major concern for businesses and individuals. But there are ways to protect yourself, from practicing basic cyber hygiene to using the latest AI-driven cybersecurity protection.

What the experts say

"Our mission is to stay one step ahead, using AI for protection rather than deception." - Siggi Stefnisson, Cyber Safety Chief Technology Officer (Gen Newsroom, 2025)

Here’s how to stay safer in the age of AI cyberattacks:

- Verify information and sources: If you receive a strange or urgent message, video, or call from someone you know, confirm it through another channel. For example, if you get an unusual email, call or text the person directly to verify.

- Hide your social media: Restrict public access to your social media profile to help prevent AI bots from scraping your page for personal info that could be used to spear phish you.

- Activate two-factor authentication: Enable two-factor authentication to help secure your banking, email, and social media accounts, even if your password is compromised.

- Use watermarks on public-facing videos: Add subtle but unique watermarks to your videos to make it easier to identify deepfakes or unauthorized use of your content.

- Track data leaks: Use breach-monitoring tools to alert you if your personal data has been exposed. AI tools can sort and weaponize leaked data quickly, so early detection is key.

- Get AI-driven scam protection: Use cutting-edge cybersecurity software with built-in AI scam detection. Tools like Norton Genie have achieved over 90% accuracy in detecting AI-generated threats.

- Limit permissions on apps and browsers: Review and restrict access to your device’s camera, microphone, contacts, and files to prevent exploitation by AI-enabled malware.

- Update your software regularly: Install system and app updates as soon as they’re available to benefit from security patches that protect against the latest exploits.
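
To see why two-factor authentication helps even when a password is stolen, it can be useful to look at how one common second factor works. The sketch below follows the published time-based one-time password (TOTP) scheme from RFC 6238 using only Python's standard library. The `totp` function name and its defaults are choices made for this example, and it is not a description of how any particular bank, app, or Norton product implements 2FA.

```python
import hashlib
import hmac
import struct

def totp(secret: bytes, unix_time: int, step: int = 30, digits: int = 6) -> str:
    """Compute a time-based one-time code (RFC 6238, HMAC-SHA1)."""
    # The code changes every `step` seconds because the counter does.
    counter = unix_time // step
    mac = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    # Dynamic truncation (RFC 4226): the low 4 bits of the last byte pick an offset.
    offset = mac[-1] & 0x0F
    code = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)

# Test secret and timestamp taken from the RFC 6238 test vectors.
print(totp(b"12345678901234567890", 59, digits=8))  # 94287082
```

Because the code depends on a secret stored on your device and on the current time, a scammer who phishes your password still lacks the short-lived code needed to sign in.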

Fight AI threats with AI protection

AI is transforming cybercrime, making scams faster, smarter, and harder to detect. But it can also be your best line of defense. Norton 360 Deluxe is a comprehensive cybersecurity suite built to outsmart today’s most deceptive attacks. It combines powerful antivirus protection with advanced AI-driven scam detection to help identify and block threats before they can harm you.

FAQs

What are the threats of AI cyberattacks?

AI cyberattacks represent a diverse range of threats, including AI-generated malware (like ransomware and spyware), social engineering attacks (such as phishing, deepfakes, and voice cloning), adversarial attacks targeting AI systems themselves, and the rapid spread of misinformation. The ability to produce text, code, images, video, and audio makes generative AI a powerful tool for hackers to streamline and scale their attacks.

How bad is AI for the world?

AI isn’t inherently bad. While it can be misused and has been criticized for its environmental impact, it also brings major benefits like boosting productivity, enabling medical advances, and enhancing communication. Like any tool, AI’s impact depends on how it’s used and the intentions behind it.

What’s the risk of misinformation in AI?

The spread of misinformation is one of the risks associated with AI. Because of the way LLMs work, they have a tendency to “hallucinate,” producing plausible but false content. Such tools can also be used by bad actors to deliberately spread fake news, scams, or propaganda that’s difficult to distinguish from the truth.

Is AI safe to use?

Yes, AI tools are generally safe to use, especially on well-known platforms like ChatGPT and Gemini, which have strong content moderation and security policies. However, third-party AI tools without proper safeguards may expose users to misinformation or data security risks. Always use reputable platforms and avoid sharing sensitive information.

Editorial note: Our articles provide educational information for you. Our offerings may not cover or protect against every type of crime, fraud, or threat we write about. Our goal is to increase awareness about Cyber Safety. Please review complete Terms during enrollment or setup. Remember that no one can prevent all identity theft or cybercrime, and that LifeLock does not monitor all transactions at all businesses. The Norton and LifeLock brands are part of Gen Digital Inc.
