AI scams: What are they and how can you avoid them?

Artificial intelligence (AI) is making it easier than ever for scammers to commit fraud. AI-assisted scams like deepfakes are on the rise, and they’re becoming harder to detect. Read on to learn about the most common AI scams and how to detect them. Then get an industry-leading online security tool to help detect deepfakes and AI scams, and block other online threats.

AI is already transforming the way we interact with technology. Unfortunately, scammers and other bad actors have been abusing powerful AI tools to hack, spread misinformation, and carry out scams on a bigger scale than ever before.

In this article, you’ll learn how to identify the most common AI scams and how to protect yourself from the potential dangers posed by AI-assisted fraud.

What are AI scams?

AI scams are scams committed with the help of artificial intelligence technology. Hackers and scammers use illicit dark AI tools to help them commit complex crimes at scale, leveraging deepfakes, AI voice clones, and AI phishing attacks to steal personal information and defraud innocent internet users.

AI scams are hard for both victims and law enforcement to detect, and around 1 in 3 instances of fraud involving AI is successful. The first line of defense against artificial intelligence scams is knowledge: knowing how to detect scammers’ tricks when you see them.

Why are AI scams so dangerous?

AI scams are particularly dangerous because they’re so hard to detect: scammers can now use AI tools that make their schemes more convincing. AI also lets scammers launch more scams, across more channels, with less effort.

AI scams are more sophisticated than ever before. Deepfakes and AI voice clones (AI impersonations of someone’s face or voice) are making it easier for scammers to impersonate trusted people and manipulate victims. According to research from the digital identity firm Signicat, deepfakes have surged by over 2,100% since generative AI went mainstream in 2022.

Tech companies and law enforcement are scrambling to protect users from AI threats, but AI technology is moving quickly, and it’s tough to keep pace with. There’s no denying that we’re entering an age of unprecedented advancement, but we also need to be aware of the dangers.

Common types of AI scams

Learning how to identify common AI scams is one of the best ways to protect yourself from becoming a victim. Below are some of the most popular tricks in an AI scammer’s toolkit.

1. AI voice cloning

AI voice cloning happens when a scammer imitates someone’s voice with the help of AI technology. In this form of vishing (a portmanteau of “voice” and “phishing”), scammers use cloned voices to manipulate their victims into sending money or personal information.

For example, a scammer might imitate the voice of your boss and call you asking to confirm sensitive company data. Or, they may imitate a loved one and call asking for urgent financial assistance.

Did you know? Scammers need as little as 3 seconds of audio to clone your voice. Then, they can impersonate you with striking accuracy.

In 2023, Arizona mom Jennifer DeStefano received a call from her “daughter,” Brie, who said she’d been kidnapped. “The voice sounded just like Brie’s, the inflection, everything,” said DeStefano. Scammers made a $1M ransom demand, which DeStefano luckily did not pay before the ruse was revealed.

To avoid falling for an AI phone scam, don’t panic if a loved one or coworker calls you with an urgent or scary demand. Hang up the phone and contact them via a different communication channel to confirm the first call was legit. It’s also a great idea to agree on a safe phrase, word, or question that only you and your loved ones know. If a suspicious call comes in, ask the caller for it; a scammer won’t know the answer.

2. Deepfake scams

Deepfake scams are a type of AI fraud where a scammer creates a digital double of someone else on video. These videos can mimic a person’s appearance and voice almost perfectly.

Deepfake scams can use pre-recorded or live videos, so victims may think they're video calling with a friend or colleague when they’re actually talking to a scammer. Scammers also commonly deepfake celebrities and influential figures.

In one recent case, a deepfaked Elon Musk encouraged retired nurse Joseph Ramsubhag to buy into a cryptocurrency scam. Over time, the scammer updated him about his growing wealth, which encouraged him to invest hundreds of thousands more into the con. When Ramsubhag tried to sell, he learned his money was gone.

Deepfakes like these are growing increasingly common, and they are expected to balloon fraud losses to over $40 billion within the next few years.

To protect yourself, be wary of promises that are too good to be true — especially if the communication comes from celebrities. And if you receive an urgent video call or message from someone you know, verify their identity by calling them on a different platform before taking any action.

For added protection, turn to the Scam Protection suite included with Norton 360 Deluxe. Powered by advanced AI, it analyzes patterns and video cues to help detect deepfakes and other sophisticated scams — so you can spot threats early and shut them down before they do harm.

3. AI-powered bots

AI bots are online chatbots that try to manipulate you into taking some action, such as buying into a scam, giving away your personal info, or clicking malicious links. These bots are programmed to have human-like conversations, but they’re powered by generative AI tools.

AI chatbots often imitate people and organizations you trust, such as your bank’s tech support or even a romantic match on a dating site. They can also engage with you on social media by liking and commenting on your content before reaching out in a DM.

AI chatbots are everywhere on the internet. Microsoft claims to block about 1.6 million of them every hour from signing up for Microsoft accounts, but many more are likely slipping through in other areas and on different sites.

To protect yourself, don’t give out any personal info via chat, even if you think you’re chatting with someone you trust. And never click links sent via chat, email, or text, especially if they come from an unknown sender.

4. AI-generated fake websites

Scammers are using AI to build fake websites that mimic real businesses, government pages, the media, and more. In the past, it took time to create a detailed web page. But now, scammers can use AI to generate images, product descriptions, and even customer reviews in minutes.

What the experts say

"Welcome to the era of VibeScams, where creating a phishing site takes just a few clicks. Anyone with an internet connection (can) replicate trusted brands with alarming fidelity." - Gen Blog, 2025

Jan Rubín, Threat Research Team Lead

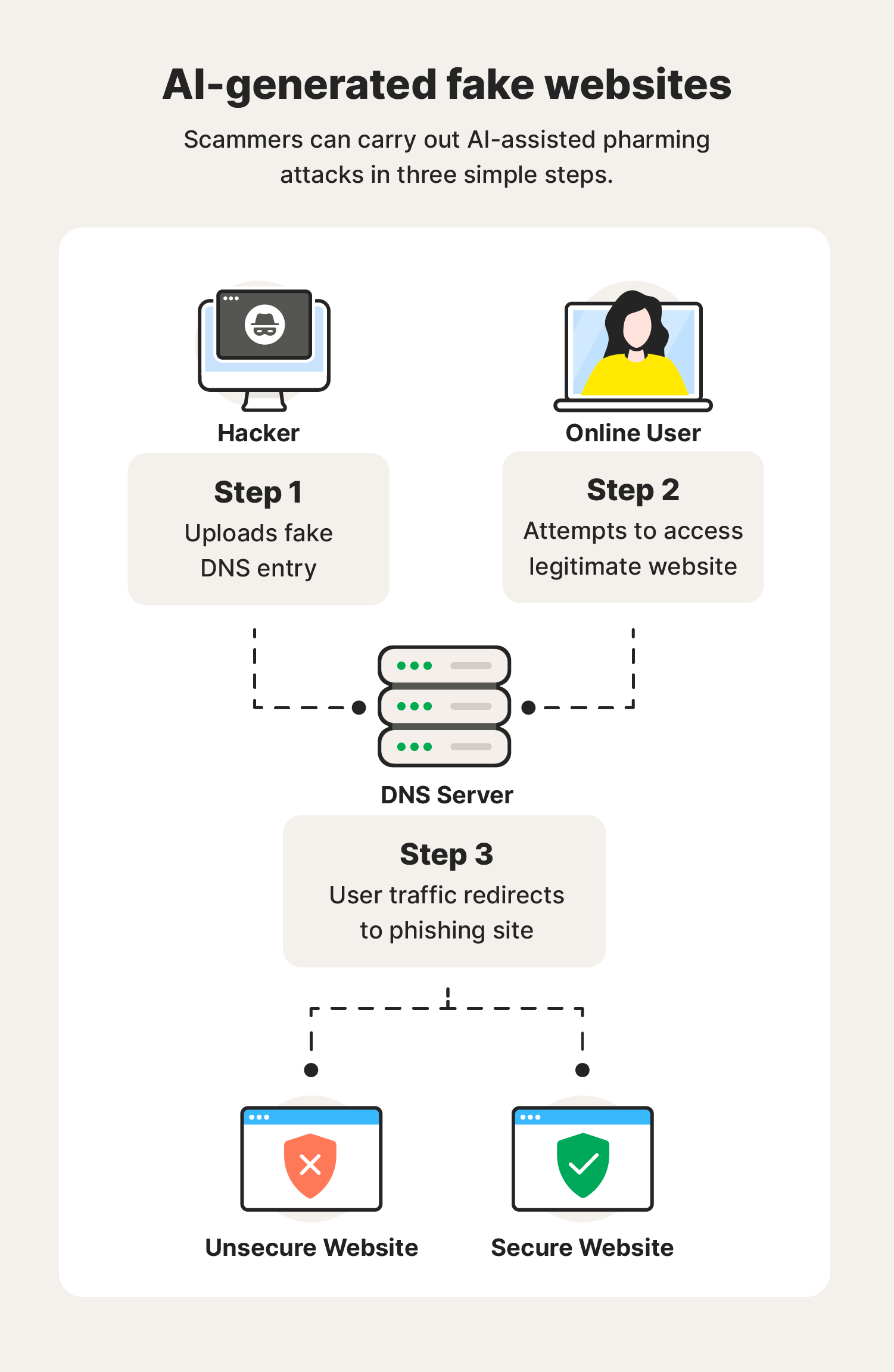

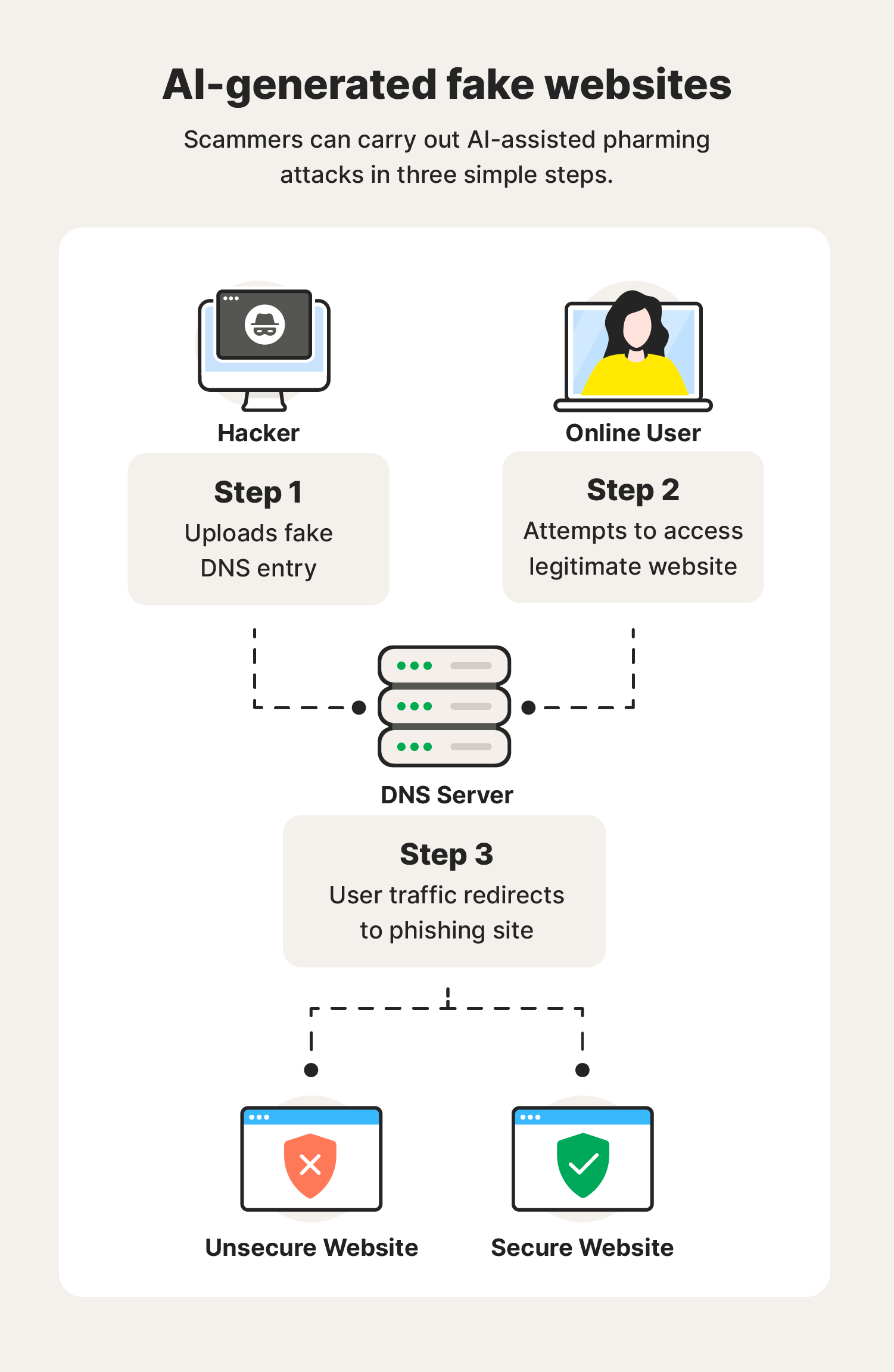

Scammers lure victims to these AI-created dupe websites through sophisticated pharming attacks. Once there, victims enter their personal or financial information, thinking they are buying a product or logging into their account. In reality, they’re sending their info directly to a scammer.

Scammers then use the input information for financial fraud, identity theft, and phishing attacks. Or, they sell your sensitive data on the dark web.

Banks and online financial services like PayPal are commonly faked by scammers. Always pay close attention to the URL (web address) to check for differences against the real sites.

Always visit websites by typing the address into your browser’s address bar (or using your bookmarks). If you see a link to a website on social media or in a message, don’t click it. It may direct you to an impostor site.
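For readers comfortable with a little code, the URL check described above can be sketched in Python. This is a minimal illustration, not how any particular security product works: the `TRUSTED_DOMAINS` list, the `classify_url` function, and the 0.8 similarity threshold are all assumptions made for the example, and `mybank.example` is a placeholder domain.

```python
from difflib import SequenceMatcher
from urllib.parse import urlsplit

# Assumption: a personal allowlist of sites you really use.
# "mybank.example" is a placeholder, not a real bank.
TRUSTED_DOMAINS = ("paypal.com", "mybank.example")


def hostname_of(url: str) -> str:
    """Return the lowercase hostname of a URL, or "" if there is none."""
    if "://" not in url:
        url = "https://" + url  # lets urlsplit find the host in bare addresses
    return (urlsplit(url).hostname or "").lower()


def classify_url(url: str, threshold: float = 0.8) -> str:
    """Label a URL "trusted", "lookalike", "unknown", or "invalid"."""
    host = hostname_of(url)
    if not host:
        return "invalid"

    # Exact match, or a genuine subdomain such as www.paypal.com.
    for domain in TRUSTED_DOMAINS:
        if host == domain or host.endswith("." + domain):
            return "trusted"

    for domain in TRUSTED_DOMAINS:
        # Trusted name buried inside a longer, unrelated hostname.
        if domain in host:
            return "lookalike"
        # Near-miss spelling: compare the last labels of the hostname
        # (e.g. "paypa1.com") with the trusted domain.
        label_count = domain.count(".") + 1
        tail = ".".join(host.split(".")[-label_count:])
        if SequenceMatcher(None, tail, domain).ratio() >= threshold:
            return "lookalike"

    return "unknown"
```

With this sketch, `classify_url("https://www.paypal.com/signin")` returns `"trusted"`, while `classify_url("https://paypa1.com/login")` and `classify_url("https://paypal.com.secure-login.example/verify")` both return `"lookalike"`. A simple string comparison like this will miss some tricks (for example, look-alike characters from other alphabets), so treat an "unknown" result as unverified rather than safe.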

5. AI-generated romance scams

AI romance scams happen when scammers use AI tools to win the hearts of their victims. Then, they manipulate them into sending money.

Romance scams use AI to generate photos, voice, and video of a person who doesn’t exist (or they can deepfake a real person). Then, scammers set up dating profiles, social media accounts, and more to give the fake person a digital footprint. AI chatbot scammers automate conversations with many victims simultaneously, so they can sit back and relax while the AI does all the seducing.

Recently, a Scottish woman named Nikki MacLeod was duped out of £17,000 in the form of bank transfers, PayPal transactions, and gift cards as she built a relationship with a romantic partner who didn’t actually exist. When she felt skeptical, she was reassured by what she now knows were deepfakes.

Did you know? Generative AI models such as GPT-4.5 have reportedly passed the Turing test, meaning people often can’t tell whether they are speaking with an AI bot or another human. So, if you’re sure you’d be immune to AI’s romantic advances, think again.

6. AI-generated phishing attacks

Phishing attacks are social engineering schemes where hackers send messages pretending to be someone else, in hopes of collecting your private information.

Before AI, scammers had to write their own phishing messages and reply to their victims. Now, all of that can be automated by AI. Scammers can generate convincing messages in seconds. And, unlike the clunky and error-filled messages of the past, AI sounds genuine and doesn’t make human mistakes, like typos.

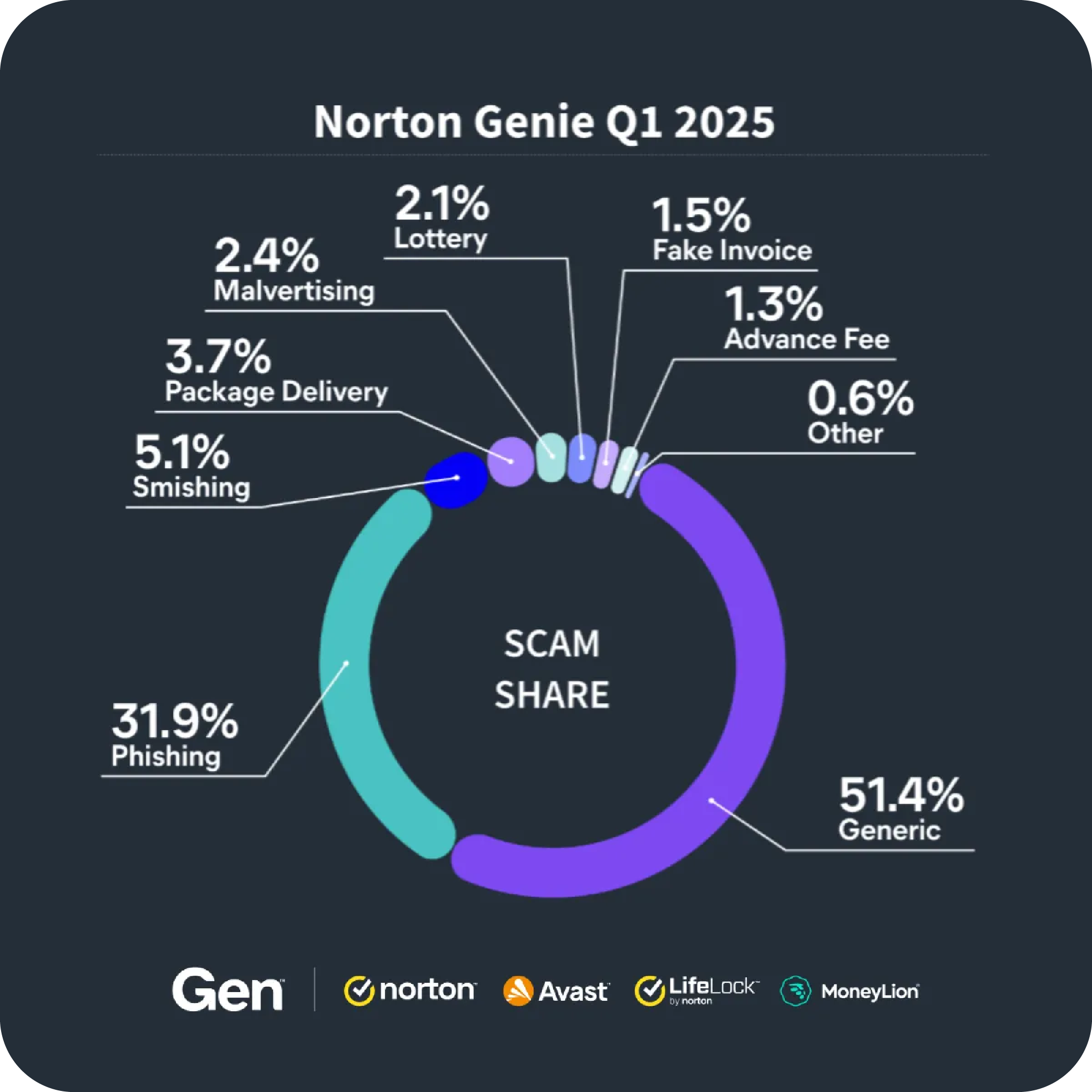

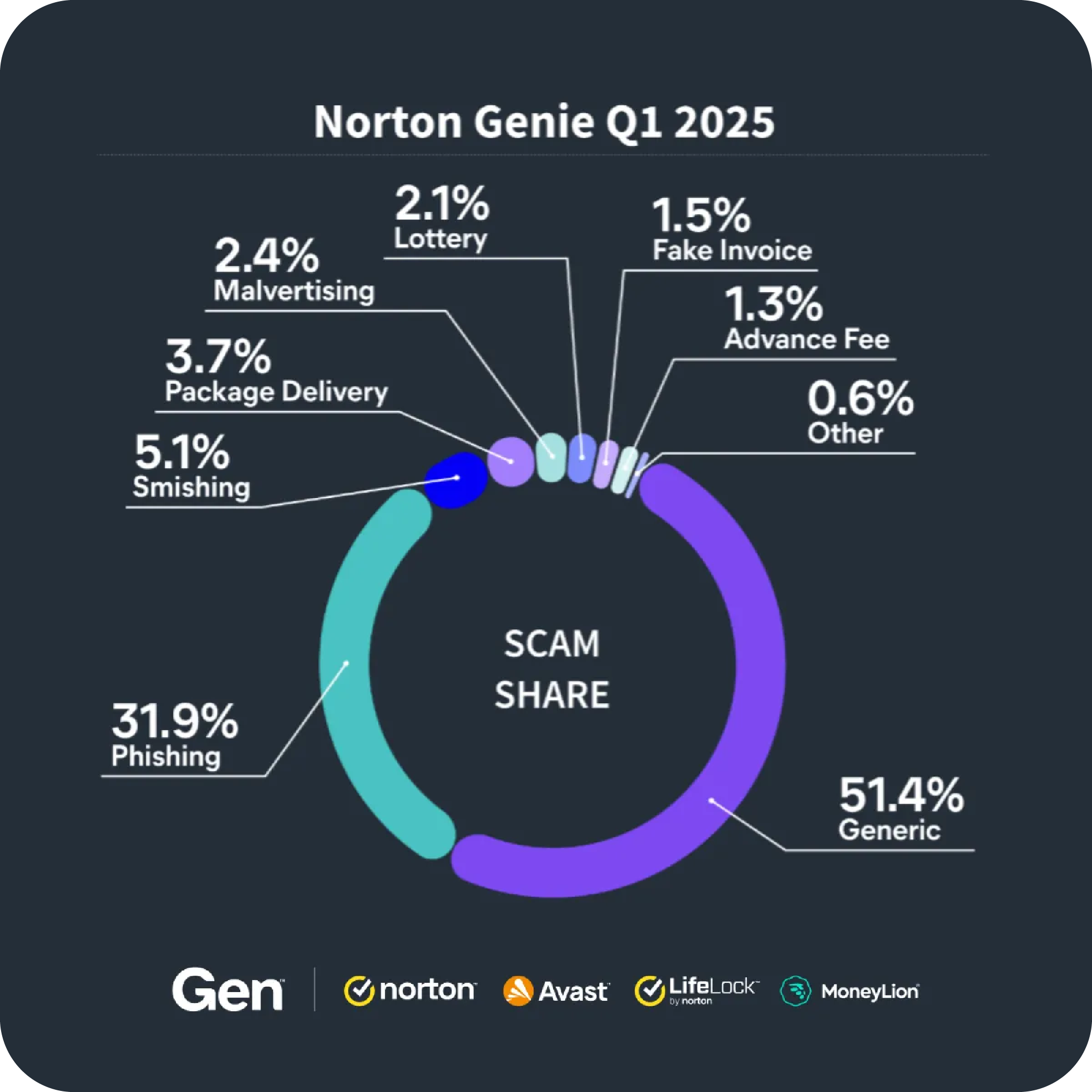

Luckily, new tools, also powered by AI, are helping people and businesses rapidly scan their messages for signs of fraud. AI tools like Norton Genie have helped identify hundreds of thousands of phishing messages, protecting people from AI spam and fraud.

According to the latest Gen Threat Report, which details Cyber Safety trends found in the first few months of 2025, phishing scams account for over 30% of all scams detected by Norton Genie — that’s a 465% increase from the previous quarter.

Source: Gen Threat Report, Q1/2025

7. AI-powered investment scams

AI investment scams trick victims into sending money by promising big returns. Scammers use AI-generated phishing messages and AI chatbots to automate their social engineering tactics. This boost in fraudster efficiency has led to a 24% rise in investment scams since 2023.

Scammers often use deepfakes and voice clones, mimicking prominent names in finance like Elon Musk and Warren Buffett to gain their victims’ trust.

Investing with scammers can seem legitimate. Many investment scams run on professional-looking fake websites or even have real representatives on the phone. But once you send your money, you won’t see it again.

To avoid these scams, talk to your bank or financial planner before making any big decisions with your money. Be wary of get-rich-quick promises, especially if they require urgent action or involve cryptocurrency, a favorite of scammers because transactions are hard to reverse and difficult to trace back to a person. And never take investment advice from social media posts.

AI scams in the news

Scammers using AI have duped their victims out of hundreds of millions, and their stories have appeared in global media. Here are a few of the biggest AI scams in the news.

The deepfaked conference call

An employee of the UK engineering firm Arup transferred around $25 million to criminals after the transfer appeared to be confirmed on a video call with top company managers.

As it turned out, the employee wasn’t talking to the managers at all — all of the meeting’s attendees were deepfakes. It was a highly sophisticated deepfake attack, featuring simultaneous live deepfakes of top leadership at a major corporation.

This story demonstrates how deepfakes are targeting the highest levels of major companies. A 2024 Deloitte poll found that over 15% of executives had experienced at least one deepfake incident in their organizations.

Joe Biden AI voice clone

In 2024, scammers tried to sway the US presidential election by robocalling New Hampshire voters and telling them not to vote in the state’s upcoming primary.

The catch was that the call came from an AI impersonation of Joe Biden. It began with a classic Bidenism: “What a bunch of malarkey,” and continued with an urge to stay home from the polls. The AI scam was quickly uncovered, but it shows how easily AI can be used to manipulate elections or other political activity.

Recently, the World Economic Forum ranked AI misinformation as the second most likely risk of “crisis on a global scale.” Many countries have already experienced meddling in their elections by scammers and foreign adversaries.

Taylor Swift deepfake scams fans

Due to her mega-star status, Taylor Swift is among the most deepfaked celebrities. In a recent scam, she appeared in a series of social media ads offering free Le Creuset cookware sets to her fans.

To redeem the offer, participants had to give their personal information and make a small payment for shipping. Of course, the videos were fake — Swift never made such an offer. Scammers made off with the cash, financial info, and personal info of thousands of Swifties.

How to help avoid AI scams

AI scams can be tricky to detect, but you can limit your exposure to risk by following some basic cybersecurity best practices.

- Don’t click suspicious links: Links are how scammers send you to unsafe places online, such as fake websites, and they can even expose your devices to malware. Avoid clicking suspicious links sent via chat, email, or text.

- Avoid clicking links in social media posts: Scammers love to spread malicious links and AI misinformation via social media — especially viral posts that your family and friends might share.

- Be skeptical of unsolicited messages: Unsolicited messages are often from scammers. AI helps them generate and respond to more of these messages than ever. It’s best to avoid replying to these messages.

- Limit sharing personal information online: Don’t share your personal information on social media, messaging apps, AI tools, or anywhere online (besides your trusted financial or government accounts). Scammers use AI to crawl the internet for personal information, which they exploit to commit identity theft.

- Invest in AI scam protection: One of the most effective ways to fight AI cyber threats is with AI cybersecurity. AI scam detection tools identify AI risks and alert you before it’s too late. For example, Norton Genie is over 90% accurate at identifying scams.

- Be wary of urgent or unusual requests: If someone sends you an urgent or threatening request, it may be a scammer trying to manipulate you. Take a step back and wait until you’re thinking clearly before taking action.

- Verify caller/sender identities: Always double-check the identity of a caller or sender when you receive a message requesting personal info or money. Verify their identity by calling them via a different communication channel.

Some AI browsers have built-in tools to help you spot fake AI websites. Consider these or other browser-based tools to help you spot these scams.
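One check that a browser-based tool could plausibly run is comparing the address a link displays with the address it actually opens. The Python sketch below illustrates that idea only; it is not a description of how any specific browser or Norton product works, and the `LinkAuditor` class and its `mismatches` list are names invented for this example.

```python
from html.parser import HTMLParser
from urllib.parse import urlsplit


def _host(url: str) -> str:
    """Lowercase hostname of a URL, ignoring a leading "www."."""
    if "://" not in url:
        url = "https://" + url
    return (urlsplit(url).hostname or "").lower().removeprefix("www.")


class LinkAuditor(HTMLParser):
    """Collect links whose visible text names a different site than the href."""

    def __init__(self):
        super().__init__()
        self._href = None      # href of the <a> tag currently open, if any
        self._text = []        # visible text collected inside that tag
        self.mismatches = []   # (visible text, real destination) pairs

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._href = dict(attrs).get("href")
            self._text = []

    def handle_data(self, data):
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            shown = "".join(self._text).strip()
            # Only compare when the visible text itself looks like an address.
            looks_like_address = "." in shown and " " not in shown
            if looks_like_address and _host(shown) != _host(self._href):
                self.mismatches.append((shown, self._href))
            self._href = None


message = (
    '<p>Log in at <a href="https://paypa1.example/login">www.paypal.com</a> '
    'or read <a href="https://paypal.com/help">our help page</a>.</p>'
)
auditor = LinkAuditor()
auditor.feed(message)
print(auditor.mismatches)
```

Here the first link is flagged because it displays `www.paypal.com` but opens `paypa1.example`; the second is skipped because its visible text is ordinary words rather than an address. The heuristic is deliberately simple and will produce some false alarms, so it supplements, rather than replaces, the habits in the list above.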

What to do if you fall for an AI scam

Around one-third of all AI scams are successful. There’s always a risk of becoming a victim, even if you’ve taken precautions. Here’s what to do if you fall for an AI scam:

- Secure any compromised accounts: Change your passwords or close affected accounts. If they are financial accounts, contact the organization and set up fraud alerts.

- Report the scam to the FTC: Visit reportfraud.ftc.gov to report the scam. This will help federal authorities track the scam and prevent others from falling victim.

- File a police report: Contact your local police department to file a report. Do this immediately, as insurers and banks may require a police report before they’re able to help you with reimbursements and disputed charges.

- Document everything: Keep a journal and document every way that you’re affected by the scam. Print out financial statements and police reports, and screenshot any communication with the scammer. Clear documentation is key to recovering your funds and helping investigators hold the perpetrators accountable.

- Monitor your financial accounts: Keep an eye on your accounts to check for unfamiliar charges or other unusual activity.

- Protect your credit: Contact the main credit reporting agencies (Equifax, Experian, and TransUnion) to set up fraud alerts or freeze your credit. Freezing your credit prevents anyone (including you) from opening lines of credit, such as new credit cards. Fraud alerts require lenders to request verification before extending credit.

- Scan your devices for malware: Use antivirus software to scan your internet-connected devices (phone, tablet, computer, etc.) for malware. This software will locate hidden viruses and other malicious activity happening behind the scenes.

Don’t be fooled by the robots

AI has given scammers a bag of new tricks, from deepfakes to AI-created malware. But you don’t have to fall victim to AI scams. To avoid their traps, learn to identify scammers’ tricks, and get the latest AI cybersecurity protection with Norton 360 Deluxe.

Norton 360 Deluxe helps block hackers, protect your sensitive information, and monitor for AI scams across devices — so you don’t have to become a cyberforensics specialist just to stay safe online.

FAQs

Is ChatGPT safe?

Yes, ChatGPT is generally safe. OpenAI (the maker of ChatGPT) uses advanced cybersecurity measures and content moderation to protect users from cyber threats, harmful interactions, and misinformation.

However, users should avoid sharing personal information in conversations with ChatGPT. OpenAI saves conversations for research purposes, so sharing personal info may increase your risk in the event of a data breach.

Is AI a scam?

No, AI is not a scam. AI is a legitimate technology that utilizes machine learning algorithms to recognize patterns in data and predict outcomes. Much like any other technology, the benefits or risks of AI depend on how it’s used.

Doctors are using AI to more accurately detect and prevent cancer. On the other hand, fraudsters misuse AI to create convincing scams, tricking victims out of their money and sensitive data.

Editorial note: Our articles provide educational information for you. Our offerings may not cover or protect against every type of crime, fraud, or threat we write about. Our goal is to increase awareness about Cyber Safety. Please review complete Terms during enrollment or setup. Remember that no one can prevent all identity theft or cybercrime, and that LifeLock does not monitor all transactions at all businesses. The Norton and LifeLock brands are part of Gen Digital Inc.
