Is AI dangerous?

Artificial intelligence has the potential to change the world for the better, but in the wrong hands, it can also do harm. So, is AI dangerous? And which AI threats might you be vulnerable to? Learn about some of the main risks of AI. Then, help protect yourself from its dark side with Norton 360 Deluxe.

AI is being integrated into countless industries — from food service to social media. It’s becoming an integral part of our daily lives — whether we understand the risks or not.

When people talk about AI today, they’re usually referring to generative AI (GenAI). This type of AI “learns” by analyzing large data sets and uses its “knowledge” to generate content, such as text and images, in response to user-written instructions called prompts. It’s the technology behind large language models (LLMs), which power chatbots such as ChatGPT.

Lots of people use GenAI apps to boost their productivity, fuel their creativity, and get help with everyday tasks. However, because AI is so accessible, it can be used for harm perhaps as easily as for good.

So, yes, AI can be dangerous. In fact, AI-powered platforms known as dark AI tools already exist, designed specifically to help hackers and scammers perpetrate crimes at scale. AI is also rapidly changing the internet, the economy, and society as a whole in unexpected ways, presenting new and constantly evolving cybersecurity and privacy threats.

The first step to protecting yourself is to learn what the dangers are. Here are some of the most important ones to know about, so you can browse, interact, and generate more safely.

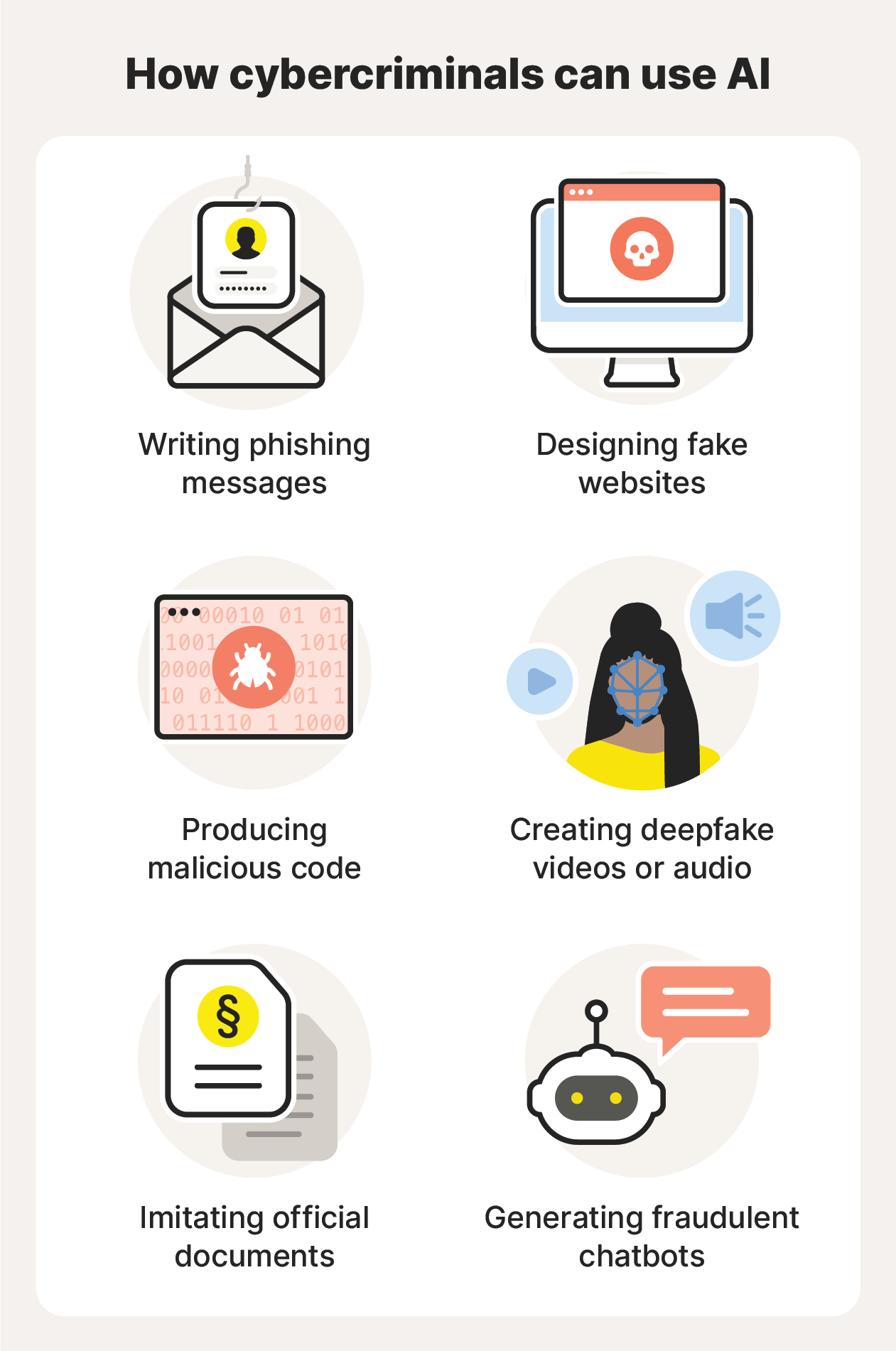

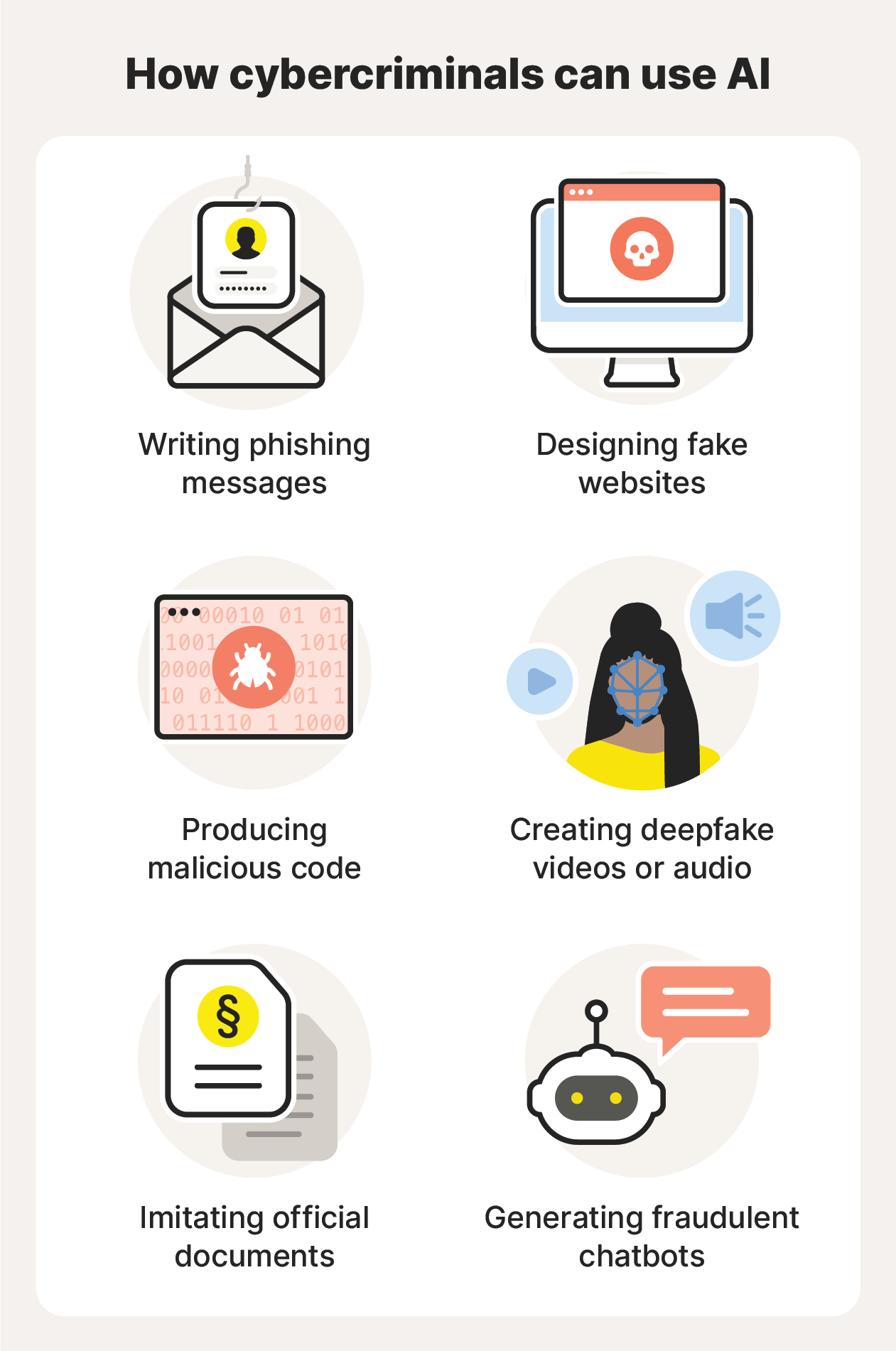

1. Cybersecurity threats

AI is making it easier than ever for cybercriminals to plan and orchestrate attacks. In the past, hackers needed time and training to design attacks or create malware. Today, they can use dark AI programs like FraudGPT to construct elaborate attacks in seconds and automate the entire process: scanning for vulnerabilities, launching social engineering campaigns, and even adapting in real time to evade detection.

AI also enables more sophisticated attacks than ever before, such as convincingly real deepfakes, voice cloning (audio deepfakes), and fake versions of official documents.

AI is increasing the productivity of cybercriminals and putting businesses and individuals at greater risk of AI scams. To help protect yourself, keep up to date with the latest online scams and tricks cybercriminals use, avoid giving personal information to anyone you don’t know or trust, and never click links in suspicious messages.

For an added layer of security against the risk of AI scams and hacking attacks, get Norton 360 Deluxe. You’ll benefit from real-time protection against malware, fake websites, and hackers, as well as advanced AI-powered Scam Protection features that can help you spot sophisticated phishing attacks.

2. Data privacy exposure

AI tools require massive amounts of training data to work effectively. This means the companies behind them need to collect data to create valuable products. Some of this data may come from your conversations with AI and your interactions on social media.

Some companies, such as OpenAI (the creator of ChatGPT), admit to storing chat data and using it to train their AI tools. Other AI applications, such as facial recognition technology, may record your face and personal info without your knowledge. The more places your info is stored, the greater your risk of sensitive data exposure through data breaches or leaks.

Pay close attention to the data collection practices of AI companies — including online platforms that use AI, like search engines, AI browsers, or social media websites — and never provide sensitive information in AI conversations.

3. Job loss

AI is extremely versatile and can perform many tasks more quickly and cost-effectively than humans. AI is taking over jobs today by the tens of thousands, and it’s predicted to take over as many as 300 million human jobs by 2030. AI’s effect on the job market could cause economic hardship and significant societal turmoil.

It’s not easy to predict how AI will impact the future of labor, but experts say that the best way to protect yourself is to embrace AI and learn how to use it in your industry. Humans who can leverage AI to boost their productivity will be the most attractive job candidates.

4. Algorithm biases

AI is only as “intelligent” as the data it’s trained on. When its training data is incomplete or skewed, it may produce biased or false responses that can have dangerous or unfair real-world implications. If that data contains stereotypes, AI could perpetuate them when generating text and images, creating a dangerous feedback loop.

For example, an AI used across several U.S. health systems was found to be prioritizing healthier white patients over sicker Black patients because it was trained on cost data, not care needs. That’s why it’s critical to take AI output with a grain of salt, fact-check its replies, and think critically about biases it may have.
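To see how training on cost instead of care needs can skew results, here is a minimal Python sketch. The patients, need scores, and dollar figures are invented purely for illustration and are not taken from the health system example above. It simply ranks the same three hypothetical patients two ways: by past spending and by how sick they actually are.

```python
# Hypothetical patients: (name, true_need_score, past_annual_cost_usd).
# All values are made up for illustration only.
patients = [
    ("A", 9, 3000),  # very sick, but historically had less spent on care
    ("B", 4, 8000),  # healthier, but historically had more spent on care
    ("C", 7, 5000),
]

def rank_by(rows, column):
    """Return patient names ordered from highest to lowest on one column."""
    ordered = sorted(rows, key=lambda row: row[column], reverse=True)
    return [row[0] for row in ordered]

by_cost = rank_by(patients, 2)  # what a cost-trained system would prioritize
by_need = rank_by(patients, 1)  # what a need-based system would prioritize

print("Ranked by past cost:", by_cost)
print("Ranked by care need:", by_need)
```

In this toy data, the sickest patient lands last when ranked by cost. If one group has historically had less money spent on its care, a system that treats spending as a stand-in for need can inherit that gap.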

5. Job screening inequities

One of the most notable examples of AI algorithm bias appears in job screening. Companies often use AI tools to eliminate unqualified applicants before a human considers the remaining selection. But AI has been found to perpetuate long-standing biases, such as favoring applications with male-sounding names and eliminating those with names associated with Black people.

This is likely because the AI was trained on data that represents the hiring practices of biased humans. Regardless of the cause, though, without deliberate remedial intervention, AI job screening tools could lead to a less equitable job market, while also hurting businesses by eliminating perfectly qualified candidates.

6. Incorrect outputs

AI is not always correct. One study from 2024 found that generative AI tools could agree with false statements up to 26% of the time. GenAI features incorporated into search tools, like Google’s Gemini, also suffer from high rates of inaccuracy, reportedly citing false or incorrect sources around 60% of the time.

Despite this, AI-generated content is already influencing news reporting, business strategies, and legal cases. This is raising the risk of widespread misinformation and serious errors across industries. The easiest way to guard against AI inaccuracies is to fact-check its output and claims against reputable sources.

7. Environmental harm

The technology that powers generative AI programs consumes a massive amount of energy, which could lead to increased CO2 emissions and associated negative environmental impact.

It’s estimated that a single query in ChatGPT consumes about as much electricity as powering a light bulb for 20 minutes, and the total electricity consumption of data centers is soon expected to exceed that of entire nations. AI brands are searching for ways to reduce their environmental impact, but users can help by using AI only when necessary.
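The light bulb comparison can be turned into a rough number, though the result depends heavily on what kind of bulb you picture. The sketch below is a back-of-the-envelope calculation only: the 10-watt and 60-watt figures are assumptions for a typical LED and a traditional incandescent bulb, since the estimate doesn't specify a wattage.

```python
def watt_hours(bulb_watts: float, minutes: float = 20) -> float:
    """Energy in watt-hours used by a bulb running for the given minutes."""
    return bulb_watts * minutes / 60

# Assumed wattages; the estimate above does not say which bulb it means.
led_wh = watt_hours(10)           # typical LED bulb (assumption)
incandescent_wh = watt_hours(60)  # traditional incandescent bulb (assumption)

print(f"LED bulb: {led_wh:.1f} Wh per query")
print(f"Incandescent bulb: {incandescent_wh:.1f} Wh per query")
```

Under these assumptions, the two bulb types differ by a factor of six, which is why per-query energy figures are best treated as rough estimates.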

8. Copyright infringement

Generative AI’s “intelligence” is solely a product of its training, and the data it trains on is entirely made up of existing information created by real humans — sourced from websites like Wikipedia, forums like Reddit, academic papers, and a vast collection of digitized books.

However, the creators of content used in training data often go uncompensated and unacknowledged as sources in responses — even if the content is copyrighted.

AI companies gobble up all the data they can find when training their AI models, but they insist that if they had to pay for it, they’d go out of business. It’s unlikely that humans will be able to protect their intellectual property from AI, so AI will continue to use and imitate human writing, art, music, and more.

9. Physical harm

AI isn’t just writing our emails — it’s also increasingly fighting our wars. AI is bringing automation to warfare, with autonomous weapons like drones being used in conflicts around the world. Some believe that this may make it easier for countries to engage in warfare, with AI fueling the initiation or escalation of conflict.

10. Lack of transparency

Most AI companies are not required to share information about their data collection, algorithms, or carbon emissions. This makes it difficult to understand the risks regarding users’ data security or the implications of using AI. Some companies, such as OpenAI, are somewhat open about how they collect user data. But many other AI tools, especially third-party AI apps, are not as transparent.

It’s important to understand how your data is being used and who has access to it before using an AI tool. Review privacy policies to confirm that your data is secure and not being sold to third parties or advertisers.

Identify AI scams

AI is helping humans solve problems and make progress in meaningful ways, but it’s also creating brand new risks. Perhaps most pressingly, it’s providing cybercriminals with the means to carry out sophisticated attacks more quickly and at lower cost, while also infiltrating our knowledge sources with misinformation.

While you can’t completely avoid AI risks, you can help protect yourself by staying informed and using the latest cybersecurity software. Help protect your data and devices with Norton 360 Deluxe, which offers powerful scam detection features, including a built-in AI-powered assistant that can even help you spot deepfake videos. Let our advanced AI tools help keep you safe from sophisticated text and web scams, wherever you go.

FAQs

Can AI become self-aware?

While, theoretically, a future iteration of AI could become self-aware, its current form cannot. Generative AI tools work like complex calculators, analyzing a prompt and generating an “effective” response by assessing statistically similar content in their training data. While AI responses may seem intelligent, they’re simply the products of calculations and show no signs of self-awareness.

Can AI take over the world?

No, AI can’t take over the world in its current state. Generative AI tools like ChatGPT are built for specific, narrow purposes, and they are unable to think, plan, or make complex choices.

Is it dangerous to talk to AI?

Talking to AI can be dangerous if you aren’t aware of the risks. Most mainstream AI tools have safeguards in place that help prevent hate speech, misinformation, and other malicious interactions. However, some dark AI tools remove these restrictions and could lead to harmful conversations.

Talking to AI can also be dangerous if you don’t know how your data is being used. AI tools can record your conversations and use your data for training purposes. Third-party tools may sell your chat info to advertisers or use it to scam you. Always use trusted AI tools with transparent data collection practices, and avoid giving personal information away in conversations.

Editorial note: Our articles provide educational information for you. Our offerings may not cover or protect against every type of crime, fraud, or threat we write about. Our goal is to increase awareness about Cyber Safety. Please review complete Terms during enrollment or setup. Remember that no one can prevent all identity theft or cybercrime, and that LifeLock does not monitor all transactions at all businesses. The Norton and LifeLock brands are part of Gen Digital Inc.
