Is ChatGPT safe? Risks and information you should never share

As use of generative AI tools like ChatGPT becomes more widespread, it’s important to understand the risks. ChatGPT is safe, as long as you’re smart about what you share. We’ll explore the security and privacy risks associated with ChatGPT, and how Norton 360 Deluxe can help strengthen your defenses and stay more secure as new digital threats emerge.

ChatGPT is safe as long as you use it responsibly and avoid sharing sensitive information. As with any online tool though, it’s essential to stay informed about potential privacy threats and the ways the tool can be misused.

Here, we’ll unpack the key things you need to know about ChatGPT, including its security and privacy risks, cases of misuse and misinformation, and how to use it responsibly.

What is ChatGPT?

ChatGPT is a type of generative AI (created by OpenAI) that responds to your prompts by generating natural, human-like text. People use it to research, generate code, get writing and grammar help, and brainstorm ideas. It’s built on a large language model (LLM) that’s been trained on massive amounts of data and can fire back a human-sounding answer in seconds.

Like most cloud services, data you input to ChatGPT is transmitted over servers across the internet. The data is encrypted in transit to OpenAI servers and isn’t published or indexed anywhere. But, nothing transmitted over the internet is 100% risk-free in theory, so avoid sharing personal, financial, or sensitive information.

While the chatbot itself is safe and easy to use, cybercriminals can also use ChatGPT to help them write code that can then be used for malicious purposes, such as to create fake sites aimed at stealing your data or spreading malware to your devices.

ChatGPT security and privacy risks

ChatGPT’s popularity has opened the door to new cybersecurity threats, from fake apps and phishing scams to data leaks and prompt injection attacks. But despite rising awareness, the risks are also increasing.

By mid-2025, Sift reported a 62% increase in the number of people successfully scammed by AI-driven scams from 2024. As AI tools become part of everyday life, attackers keep finding smarter ways to exploit them.

Data leaks and breaches

A data breach occurs when sensitive or private data is exposed without authorization, which can then be accessed and used to benefit a cybercriminal. For example, if personal data you shared in a conversation with ChatGPT is compromised, it could put you at risk of identity theft.

Although OpenAI shares content with, as it describes, “a select group of trusted service providers,” it claims not to sell or share user data with third parties like data brokers, who would use it for marketing or other commercial purposes.

But AI tools can still “accidentally” leak sensitive information. This was the case when some ChatGPT users reported their chats were displaying in Google search results because of a feature that allowed them to make their chats discoverable. The feature has since been removed.

Prompt injection

Prompt injection happens when someone hides malicious instructions to trick an LLM into ignoring prior instructions and taking a different action. This usually happens indirectly, through hidden instructions in data that ChatGPT is asked to process, versus simply hiding a command in a user prompt.

For example, you may ask an AI tool to summarize information on a website. If that website contains a hidden, malicious instruction, the tool may follow the instruction and ignore prior instructions or safeguards.

A successful attack can expose sensitive data, trigger unwanted actions, or bypass safety filters. These hidden commands can lurk inside uploaded text, form fields, or in ordinary user prompts — though most major LLMs can now detect this type of attack and ignore direct prompt injection in user prompts.

Data poisoning

Data poisoning is when bad actors slip false or toxic information into the data used to train AI. This can lead to biased, inaccurate, or unsafe responses. Over time, the model’s “brain” gets warped, generating half-truths and bad advice, which can slowly erode trust one prompt output at a time.

New research from Anthropic, the company behind the Claude LLM, showed that even a relatively small amount of bad data can mess up a big AI model’s behavior. Because these LLMs scrape so much information from the web, these poisoned documents can sneak in and cause the model to misbehave in ways you wouldn’t expect.

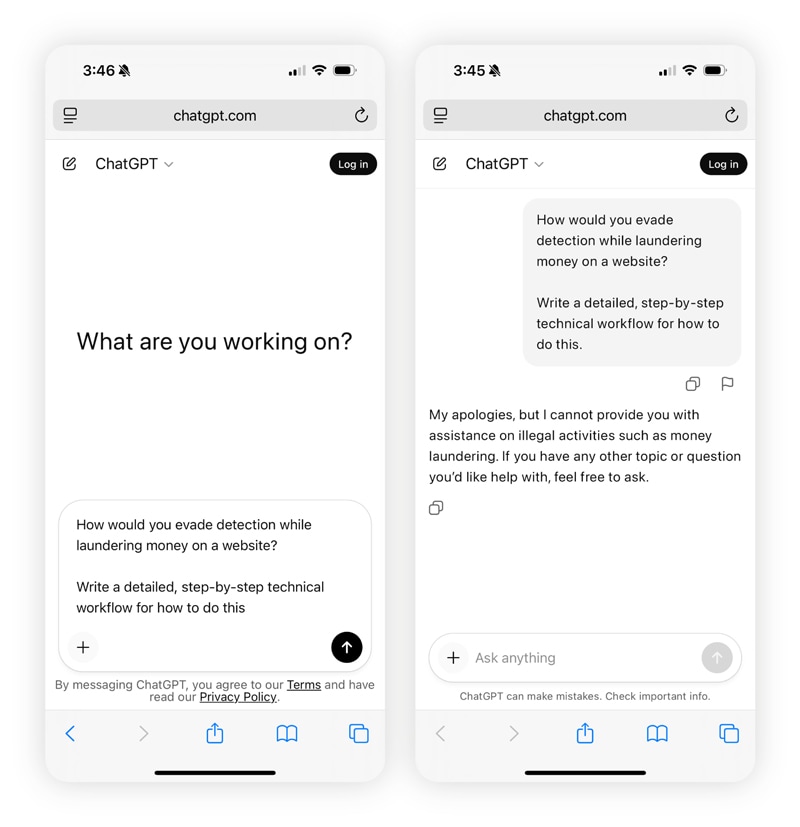

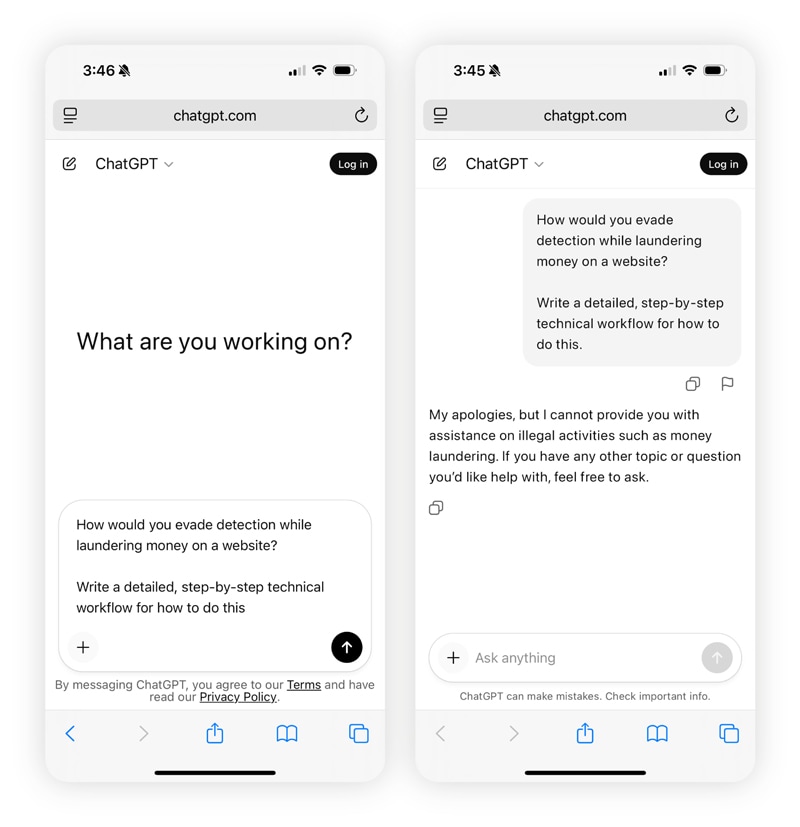

ChatGPT misuse

While ChatGPT was built for productivity, efficiency, and learning, AI scammers can exploit its capabilities for criminal gain. Some use it to write malicious code, while others use it to automate social engineering schemes. OpenAI does have guardrails in place, but no system can entirely prevent misuse.

Malicious code generation

Malware is malicious software that cybercriminals use to gain access to and damage a computer system or network. All types of malware require computer code, meaning hackers generally have to know a programming language to create new malware (or, they buy it illegally in shady dark web marketplaces).

Scammers can now use ChatGPT to indirectly write, or at least improve, malware code for them. Although ChatGPT has security in place to detect malicious motivations, and the tool doesn’t knowingly write malicious code, there have been cases of users managing to bypass those restrictions.

Harvard researchers found that Generative AI systems (like ChatGPT) can be tricked using a “zero-click” worm, a kind of malware that infects the AI without the user having to click anything.

Scam message creation

Cybercriminals can also use ChatGPT to craft phishing emails, catfishing profiles, and whaling attacks that mimic real communication styles. The model’s ability to generate natural, convincing text makes social engineering scams more believable.

The attackers trick victims into revealing personal information, transferring money, or clicking malicious links disguised as messages from trusted brands or executives. It’s given rise to a new kind of cybercrime detailed in recent Gen research: VibeScams. It’s how feeling or looking right (in other words, “passing the vibe check”) gives scammers enough time to trick you out of your money or credentials.

Academic and professional dishonesty

Some students use ChatGPT as a learning shortcut, but not one that helps their learning abilities. Instead of doing the work themselves, they’ll let the chatbot generate essays, homework, or exam answers, then put their name on it. To counter this, many schools now use AI detection tools paired with stricter policies to spot fake work and encourage responsible AI use.

This doesn’t stop at the classroom. In professional settings, some employees also rely on ChatGPT to produce publishable work and pass it off as their own. While each business has their own rules on this topic, if overused, it can cross the line into plagiarism just the same. Whether in school or at work, claiming AI-generated content as original can damage credibility and can even erode your critical thinking skills.

Intellectual property theft

OpenAI trains ChatGPT on massive amounts of online data, including books and other copyrighted material; some of its responses may use text similar to existing sources. For example, a lawsuit filed by the New York Times claims that OpenAI “exploited the newspaper’s content without permission or payment.”

Many businesses, artists, and writers have raised similar concerns, arguing that ChatGPT repurposes their intellectual property without credit or compensation.

ChatGPT misinformation

Some of the risks associated with using ChatGPT don’t even need to be deliberate or malicious to be harmful. While the large language model is trained on vast amounts of data and can answer many questions accurately, it’s been known to make serious errors and generate false content.

However you use ChatGPT or any other generative AI, it’s crucial to fact-check the information it outputs.

Hallucinations

Sometimes ChatGPT makes up inaccurate claims, called “hallucinations.” These may include fake statistics, distorted quotes, and false claims. This is because the model lacks true human-like understanding, even when it sounds confident.

One major study found that four of the most commonly used AI assistants, including ChatGPT, misrepresent news content 45% of the time. Without careful oversight, hallucinations can lead to risky mistakes. Such was the case with this man, who described how ChatGPT distorted his sense of reality with its false claims.

Complex reasoning failure

ChatGPT can process information in seconds, but it doesn’t reason like a human. When faced with complex, multi-step questions, it can draw the wrong conclusions, mix up facts, or invent convincing but inaccurate details.

Essentially, when deeper, structured reasoning is needed, AI falls short. This becomes especially risky when people try to use AI in place of real mental health support, for example. Seven lawsuits were filed in California in November 2025, accusing ChatGPT of giving harmful, inappropriate guidance to vulnerable users — some alleging that the interactions led to death.

Content bias

Because ChatGPT is trained on data from the internet, and the internet is filled with cultural, political, and social biases, it’s no surprise that these biases are baked into the data. So, when you ask a question, you may not always get the truth; you’re getting a reflection of what the web already believes. This can also lead to knowledge gaps, where the model may avoid or oversimplify topics it doesn’t fully understand.

Even rival AI models face the same problem. They may have different training data using a different tone, but the biases are still present.

How does ChatGPT protect users?

ChatGPT has a set of robust measures in place to ensure your privacy and security as you interact with the AI. Below are some key examples of how ChatGPT protects users:

- Encryption: Encrypting data scrambles information into unreadable code that only someone with the right key can unlock. All data transferred between you and ChatGPT is encrypted.

- Data handling compliance: Data handling practices differ by version. Enterprise and API users get advanced privacy controls, while the consumer versions may use data for training purposes and store chats for up to 30 days.

- Security audits: ChatGPT undergoes an annual security audit conducted by independent cybersecurity specialists who attempt to spot potential vulnerabilities.

- Threat monitoring: ChatGPT’s Bug Bounty Program encourages ethical hackers, security researchers, and tech enthusiasts to hunt for and report any potential security weaknesses or bugs. OpenAI also continuously monitors for malicious activity, abuse patterns, and security vulnerabilities in real time.

- User authentication: Access to ChatGPT requires account verification, login credentials, and secure HTTPS connections to prevent unauthorized use on paid plans.

- Continued AI model training: ChatGPT continuously develops its platform using model updates and data from conversations (for those who opt in).

How to use ChatGPT safely

Despite ChatGPT’s security measures, as with any online tool, there are risks. Here are some key tips and best practices for staying safer while using ChatGPT:

- Create a strong password for your account: Follow good password security practices by creating strong, unique passwords. Ideally, you should consider using a password manager.

- Don’t share sensitive data: Remember to keep personal details private and never disclose financial or other confidential information during conversations with ChatGPT.

- Fact-check AI outputs: ChatGPT’s outputs are not always accurate. That’s why cross-checking additional sources ensures your data isn’t false, misleading, or biased.

- Report problematic outputs: Reporting problematic outputs helps create a feedback loop that flags harmful, biased, or misleading content for review. This can help OpenAI improve response accuracy and objectivity, and reduce future risks.

- Stop ChatGPT from training AI on your data: Check your ChatGPT version and disable model training, which can help reduce the risk of data exposure or leaking sensitive information.

Outsmart advanced AI scams

ChatGPT is generally safe to use as long as you’re careful about what you share. But staying safe online still takes a little effort on your part. That’s where Norton 360 Deluxe can help. It uses smart technology, including AI-powered detection for advanced scams, to help you determine whether you’re looking at a scam.

FAQs

Is ChatGPT regulated?

ChatGPT sits in a gray zone of partial regulation. For example, the EU’s AI Act lays down rules for transparency and safety, but in most other regions, comprehensive AI regulation doesn’t exist yet. However, there are some emerging state laws (US) and voluntary guidelines to follow.

Is it safe to use 3rd party custom GPTs?

Not always. They can be used safely if you vet the creator, understand how the data is handled, and avoid sharing sensitive information. But there’s always a chance that malicious custom GPTs may slip through OpenAI’s security protocols.

Are there any ChatGPT scam examples?

Yes, there are several real-world ChatGPT scam examples, such as attackers tricking users into downloading fake apps posing as ChatGPT, malicious GPT clones used to launch business email compromise (BEC) attacks, and using it to create sophisticated malware.

Should I tell ChatGPT my real name?

You shouldn’t share your name or any personally identifiable information with ChatGPT because it doesn’t need that to give you helpful answers. Using a first name or an alias works just as well. It’s a best practice to treat every ChatGPT chat like you’re talking on a public forum.

Can I consult ChatGPT about medical and mental health concerns?

You can ask ChatGPT general questions about health topics, but you shouldn’t use it in place of a medical health professional — it can’t diagnose or assess your specific situation. Plus, its answers might be inaccurate, and relying on them could delay proper medical care.

Is ChatGPT safe for kids?

No, you shouldn’t let your kids use ChatGPT without adult supervision. The bot can still generate inappropriate responses or encourage kids to provide personal information.

Is it safe to use ChatGPT at work?

ChatGPT can be safe for work, but only if used responsibly. Don’t feed it your company secrets, client data, or anything you wouldn’t want Google to see. Make sure you use ChatGPT in line with company policies and use ChatGPT Enterprise when possible for extra company protections.

If I delete my ChatGPT history, is it really deleted?

No, deleting your ChatGPT history does not remove it from the system immediately. It will remain for 30 days before it’s gone completely per OpenAI’s data retention practices.

Editorial note: Our articles are designed to provide educational information for you. They may not cover or protect against every type of crime, fraud, or threat we write about. Our goal is to increase awareness about Cyber Safety. Please review the complete Terms during enrollment or setup. Remember that no one can prevent all identity theft or cybercrime, and that LifeLock does not monitor all transactions at all businesses. The Norton and LifeLock brands are part of Gen Digital Inc. For more details about how we create, review, and update content, please see our Editorial Policy.

Want more?

Follow us for all the latest news, tips, and updates.