Is Discord safe? A privacy and safety guide for parents

At just 13 years old, your child could create a Discord account and enter online spaces with strangers. If you’re a concerned parent, you aren’t alone in wondering if Discord is safe. Learn more about the social media platform, and install parental control software to help protect your kids from inappropriate content online.

Online social media platforms like Discord come with major potential risks for kids. One mother learned this firsthand when she discovered her 16-year-old daughter had been sending a stranger on Discord intimate selfies, photos of her friends, and pictures of the front of her house.

So, what actions is Discord taking to prevent this from happening to other children? How can parents protect their children from these online risks? Read on to learn more about Discord and how to use it more safely.

What is Discord?

Discord is a free social media platform that allows users to communicate via text, video, and voice. Users create profiles, join private or public servers (similar to chat rooms), and discuss topics that interest them with like-minded individuals.

Launched in 2015 and initially gaining popularity mostly among gamers, the service has expanded to over 200 million users, becoming a hub for various online communities. Discord feels a bit like a fun hybrid of Slack, Zoom, Reddit, and early AOL chat rooms.

Discord connects people globally, offering spaces to chat about shared interests — whether it’s Marvel movies, knitting patterns, or their high school drama teacher’s meltdown during rehearsal. Public servers can be massive, like the Midjourney server with over 20 million members, while private servers might include just a handful of close friends.

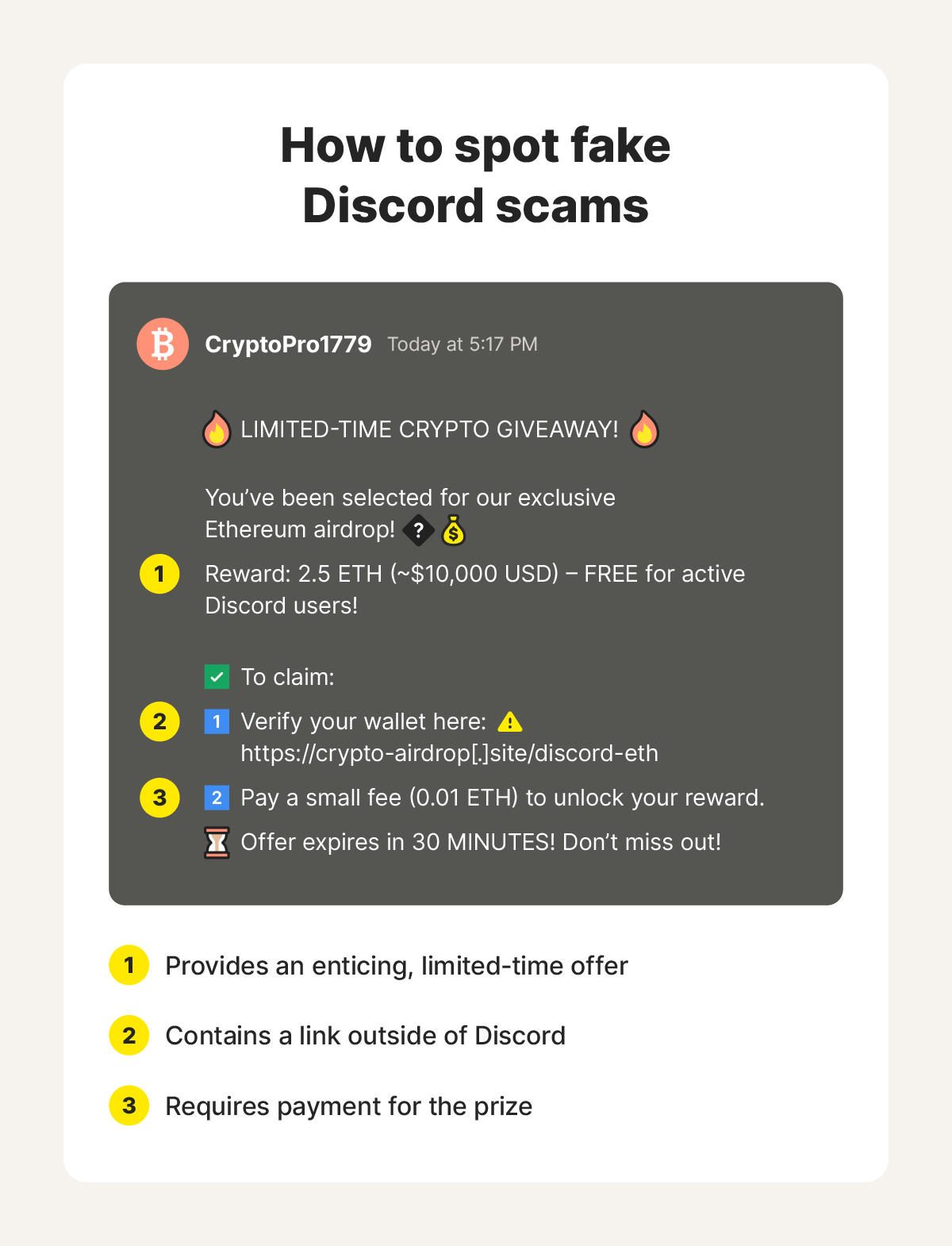

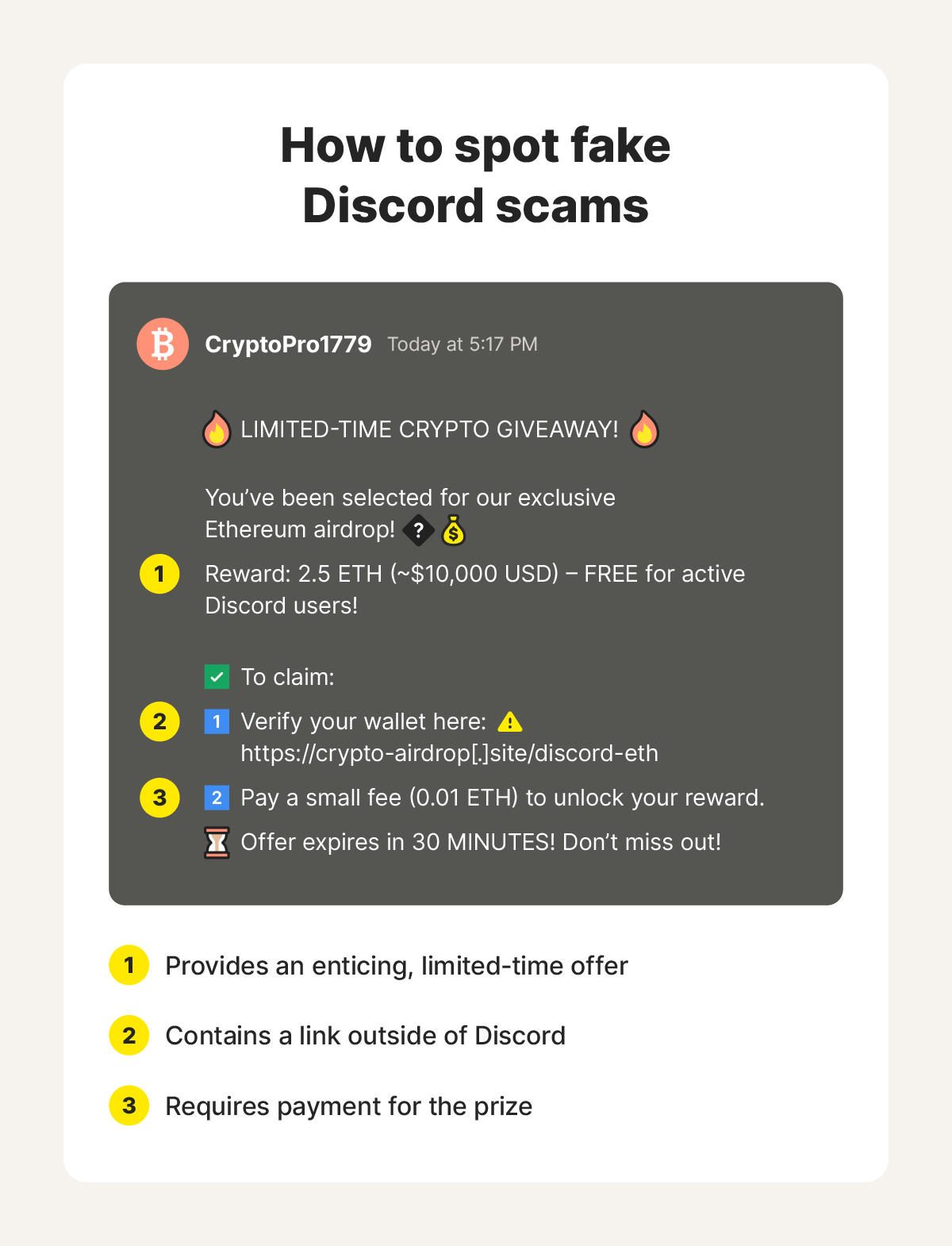

While many use Discord mostly for fun, it can also be used in the workplace for team collaboration, and it offers integrations with apps like Asana and Zapier, as well as webhooks. But, as with many social platforms, Discord isn’t without risks. It’s a known hub for social media scammers, who use the platform to impersonate trusted users, promote fake giveaways, or exploit personal information.

How Discord works

Discord works by connecting users through shared spaces called “servers,” built around topics, communities, or friend groups. After creating an account, users can join public servers via Discord’s server directory or third-party lists, or access private servers through invite links often shared on Reddit, YouTube, or Twitch.

Alternatively, users can create their own server and set up voice and text channels within it. These channels help keep conversations focused by topic, whether it’s casual chat, announcements, or project collaboration.

Server owners can appoint moderators, who control what users share. For example, a moderator could ban someone for cyberbullying or simply for annoying other members.

Individual server owners set house rules, but their approach to moderation varies: some are very strict and family-friendly, while others are more hands-off. Discord encourages server owners to include restrictions against doxxing (exposing personal information to the public), spamming, phishing, and child or revenge pornography. This is similar to how Reddit keeps its platform safe.

Verified servers, or those you can find on Discord’s public discovery search, tend to have stronger rules. These servers must abide by Discord’s Partnership Code of Conduct.

As an example, here’s a list of some rules on the Minecraft Faithful texture pack Discord server:

- Avoid being rude or annoying, which may include repeatedly bugging people or using degrading jokes.

- Don’t joke about sensitive topics, such as past tragedies.

- Don’t send loud voice messages or bright and flashy visuals that may induce seizures.

- Stick to the channel topic and avoid using chat commands unless necessary.

Unverified servers may not have the same moderation standards. Due to limited enforcement, members of these servers may have free rein to discuss offensive topics, troll people, share inappropriate pictures, or distribute self-harm material.

What are Discord’s age requirements?

In most countries, including the United States, you must be 13 years or older to use Discord. However, in some jurisdictions, such as the European Union and South Korea, local data protection laws require users to be 14 to 16 years old.

Despite these age limits, children can easily lie about their age when creating an account. To combat this, Discord is testing age verification for users who attempt to change their content filtering settings, although so far these tests are limited to the UK and Australia.

In the U.S., parents must take their own precautions to manage and restrict access. One option is installing parental controls, such as those included in Norton Family, on your children’s devices.

Norton Family can block social media pages and apps, like Discord, to protect your child from inappropriate content. It gives parents key insights into their kids’ online behavior, helping children stay safe as they learn to navigate the digital world. Norton Family also lets parents limit screen time, helping kids learn healthy online habits.

What are the risks for kids using Discord?

On Discord, your kids could be exposed to a variety of risks, including inappropriate content, online bullying, and harassment. Concerns have also been raised about Discord’s privacy practices, including data collection and security.

Here is a closer look at the risks of using Discord:

- Inappropriate content: Despite Discord’s policies regarding adult content, unverified servers and direct messages may have weak moderation. The State of New Jersey is even suing Discord, claiming the company hasn’t taken enough steps to prevent minors from accessing explicit content and interacting with potential predators.

- Cyberbullying and harassment: Children could be exposed to name-calling, hurtful words, and discrimination on Discord. In fact, nearly 20,000 accounts were disabled on Discord due to harassment and bullying, according to a recent Transparency Report released by the platform.

- Privacy and data security: Discord’s privacy policy acknowledges that it collects data on users’ accounts, messages, and activity across the platform, and that data could be exposed if it is scraped or breached. In one reported incident, Spy.pet, a private surveillance site, claimed to have scraped data from almost 620 million Discord users, with the stated intent to sell that information.

- Malware: Cybercriminals can also create Discord accounts to spread malware. For example, in January 2025, an info-stealing Trojan capable of hijacking 2FA backup codes was being disseminated on Discord.

- Contact with strangers and predators: Children who join servers outside their friend group could communicate with strangers and online predators, who could then persuade them to move to even more loosely moderated platforms like Telegram or WhatsApp. One Texas-based lawsuit claims Discord and Roblox failed to protect a young girl from online grooming.

- Live video: Discord users can create live video channels that are open to others, including minors. This may expose children to inappropriate content or lead them to inadvertently reveal personal details, such as their face or surroundings, to cyberstalkers.

- Online scams and phishing: Online scammers can create Discord accounts and join servers to launch phishing attacks. They may fool users into clicking dangerous links or falling for romance scams, pig butchering scams, or other online scams.

Some of these risks are inherent to most social media platforms. For example, Snapchat, which has a similar minimum age limit of 13, has also been called out for failing to combat cyberbullying and stalking. Fortunately, Discord has several safeguards that could help counteract the risks.

How does Discord protect children?

Discord helps to protect children by encouraging server owners to moderate content, employing an online safety team, and enabling some restrictions for account holders under 18 through the Discord Family Center. The company also relies on AI to detect abuse, scams, and phishing attempts.

Here are some more ways Discord takes action to protect kids:

- Content moderation: Discord bans content that encourages inappropriate interactions with minors, such as sexual content or harassment. Users can report violations to server moderators and Discord’s Safety Team.

- Blocking and reporting features: Users can block accounts that bother them, and Discord’s Safety Team processes reports of inappropriate behavior, such as threats of violence and harassment. Discord may suspend or ban offending accounts depending on the severity.

- Discord Family Center: Discord offers a free Family Center that provides information on a child’s activity, such as messaging, server participation, and new friends, similar to iPhone parental controls. This overview gives parents a starting point for discussing online safety, such as the risks of talking with strangers.

- Privacy and safety settings: Parents can control their children’s accounts by filtering who can send them direct messages or friend requests. The Keep Me Safe toggle automatically scans direct messages for explicit content, blocking them using automated, AI-driven systems.

- Zero-tolerance policy: Discord has a zero-tolerance policy for child sexual abuse material and grooming behaviors. Any attempt to manipulate or sexualize minors results in a permanent ban from the platform, similar to Fortnite’s policy.

- 18+ servers: Discord hosts age-restricted servers requiring users to be 18 years or older to access them. These servers block users aged 13 to 17 — but only if their account info reflects their true age.

Discord has safety measures, but your involvement as a parent is key. Familiarize yourself with Discord’s features and talk to your kids about staying safe online. Together, you and your kids can navigate the platform responsibly and stay safer from phishing, online predators, and malware.

How can you protect your kids on Discord?

You can protect your kids on Discord by educating them about online risks, limiting their screen time, and discussing any suspicious activity you see in Discord’s Family Center. You should also report suspicious users who attempt to message your child with inappropriate or harassing content.

Here’s a list of actions you can take to protect your child on Discord:

- Discuss monitored activity: Discord’s Family Center lets you monitor your kids’ activity on the platform. You could ask for more information about a new friend they’ve added online to learn who it is and if they know them in real life.

- Educate your teen: Have a frank conversation about the risks of communicating with people online. Talk about real news stories where children have been targeted on Discord, and remind your teen that online strangers can pretend to be minors to solicit inappropriate images or information.

- Research servers: Learn more about the servers your kid frequents by looking up server details on sites like Reddit or joining the server yourself. Teach your child to avoid servers with limited moderation or inappropriate content.

- Use a VPN: You can hide some device-level data from Discord with a VPN that conceals your IP address. Cybercriminals can use information from your IP to track you, and they could potentially scrape this data from Discord.

- Set screen time limits: Restrict when your child can use the app, allowing access only during approved times and under your supervision. This helps ensure they balance safe online chats with other healthy, offline activities.

- Report suspicious users: Reporting suspicious users who violate a server’s house rules may get them blocked. This limits the potential risk that other children experience on the same servers, keeping Discord safer.

- Get parental controls: Parental control software, like Norton Family, can help you block content and limit screen time. If you don’t want your child to use Discord when they should be doing homework, Norton Family can help you control when they have access to specific sites.

- Get antivirus software: Good antivirus software can help block different types of malware that infect your computer and steal sensitive information. Tools like Norton 360 Deluxe can help protect your devices from malware that your children might accidentally download, as well as alert you to cleverly concealed scams.

Given the safety concerns, some parents choose to block Discord altogether. After all, social media platforms like Discord will always have an inherent risk. The dangers are often greater for kids who don’t know what to look out for. Keeping our children safe online involves educating them about these risks — even if this means sharing personal and sometimes scary stories of our own experiences online.

Help ensure your kids are safer online

Social media can expose children to cyberbullying, inappropriate content, and other digital threats. But Norton Family helps you safeguard their online experience by filtering harmful websites, managing app access, and setting healthy screen time limits. With a little help from the most trusted brand in consumer Cyber Safety, you can keep your family safer as they browse.

FAQs

Why don’t parents like Discord?

Some parents are wary of Discord because its open chat rooms and invite-based servers can expose kids to online bullying, inappropriate content, or contact with strangers. The platform’s mix of public and private spaces makes it harder to monitor, and the ability to send direct messages and join large communities increases the risk of encountering harmful behavior.

Is Discord secure for sharing documents and private conversations?

Discord is not the most secure platform for sharing sensitive information or private conversations. While direct messages aren’t publicly visible, Discord doesn’t employ end-to-end encryption like secure messaging platforms, meaning the company can technically access your messages, and your data could be vulnerable in the event of a breach.

Do the police monitor Discord?

There’s nothing preventing law enforcement from joining servers, observing activity, or gathering publicly available information. Additionally, Discord works with law enforcement by providing information when requested via official channels. This might include personal chats, user information, or server data.

Is Discord for users 17 or older?

Discord is generally designed for users aged 13 and older, rather than 17+. However, some servers are marked as age-restricted (18+) and are only accessible to users whose account profiles meet that age requirement.

Seventeen-year-olds can use Discord but may have restricted access to certain content. Discord also offers Family Center features, allowing parents to monitor aspects of their teen’s account activity until they turn 18, at which point access becomes unrestricted unless additional parental controls are in place.

Discord is a trademark of Discord Inc.

Editorial note: Our articles provide educational information for you. Our offerings may not cover or protect against every type of crime, fraud, or threat we write about. Our goal is to increase awareness about Cyber Safety. Please review complete Terms during enrollment or setup. Remember that no one can prevent all identity theft or cybercrime, and that LifeLock does not monitor all transactions at all businesses. The Norton and LifeLock brands are part of Gen Digital Inc.
